A Tutorial for Developing SYCL Kernels

Editor’s note: This tutorial was adapted, with his permission and assitance, from Jeff Hammond's GitHub* repository.

This is an introduction to the DPC++ and SYCL coding. DPC++ is an LLVM C++ compiler to implement the SYCL standard.

Who Is This Tutorial For?

This tutorial is for programmers who already have a decent understanding of C++ and parallelism. Teaching C++ and parallelism is hard and many materials already exist. There's far less information on SYCL itself and even less about DPC++ so that's our focus.

SYCL is derived from OpenCL technology, and the run models are similar. For help with the models, see this overview.

Who Is This Tutorial Not For?

If you like modern C++, you'll like SYCL because it’s definitely modern C++. Conversely, if you hate C++, you'll hate SYCL and DPC++. So, if you don’t want to write modern C++, this tutorial is not for you.

OpenMP 5.0 offers many of the same features as SYCL and DPC++ but supports the ISO language triumvirate of C++, C, and Fortran. To program CPUs and GPUs using Fortran, C, or premodern C++ (before C++11) using an open-industry standard, try the OpenMP code.

Another alternative to SYCL and DPC++ without C++ is OpenCL code. OpenCL code is more verbose than SYCL, but if you're a C programmer, you likely prefer explicit control to syntactic efficiency.

The Tutorial

Let's start with vector addition, which is the “Hello, world!” of high-performance computing (HPC) and numerical computation. Printing “Hello, world!” doesn’t make sense in a programming model used for doing lots of things in parallel.

Vector Addition in SYCL

The operation called single-precision A times X plus Y (SAXPY) can be implemented in C or C++ as follows:

There are lots of ways to write this in C++. For example, you can use ranges that makes the code look a more like the upcoming SYCL version. But teaching you every possible way to write a loop in C++ isn’t the point of this tutorial, and the version that looks like C is common knowledge.

Here’s the same loop in SYCL. Let's break down in pieces:

As you might have guessed, parallel_for is a parallel-for loop. The loop body is expressed as a lambda. The lambda is the code that looks like [..]{..}.

The loop iterator is expressed in terms of a sycl::range and a sycl::id. In our simple example, both are one dimension, as indicated by the <1>. SYCL ranges and IDs can be one-, two-, or three-dimensional. (The OpenCL technology and CUDA* have the same limitation.)

It may be a bit unfamiliar to write loops like this, but it’s consistent with how lambdas work. However, the pattern is familiar if you ever used Parallel STL, oneAPI Threading Building Blocks, Kokkos, or RAJA.

You might be wondering about the <class saxpy> template argument to parallel_for. This is just a way to name the kernel, which is necessary for using SYCL with a different host C++ compiler than the SYCL device compiler. In this case, the two compilers need a way to agree on the kernel name. In many SYCL compilers, such as the Intel® oneAPI DPC++ Compiler, this isn’t necessary. The option -fsycl-unnamed-lambda instructs the compiler to not look for names.

The purpose of h in h.parallel_for is covered later in this article.

SYCL Queues

One challenge of heterogeneous programming is the multiple types of processing elements and often different memory types. These things make compilers and runtimes more complicated. The SYCL programming model embraces heterogeneous running, although at a much higher level than the OpenCL technology. Not everything is explicit, either. Unlike other popular GPU programming models, SYCL kernels can be put in-line into the host program flow, which improves readability.

Whenever you want to compute on a device, you need to create a work queue:

The default selector favors a GPU, if present, and a CPU otherwise. Create queues associated with specific device types using this code:

The host and CPU selectors may lead to significantly different results, even though they target the same hardware. The host selector might use a sequential implementation optimized for debugging while the CPU selector uses the OpenCL Runtime and runs across all the cores. Also, the OpenCL technology just-in-time compiler might generate different code because it’s using a different compiler altogether. Don’t assume that just because the host is a CPU, that host and CPU mean the same thing in SYCL.

Manage Data in SYCL Using Buffers

The canonical way to manage data in SYCL is with buffers. A SYCL buffer is an opaque container. This is an elegant design, but some applications need pointers, which are provided by the Unified Shared Memory (USM) extension, discussed later.

In the previous example, you allocate a C++ container on the host and then hands it over to SYCL. Until the destructor of the SYCL buffer is invoked, you can only access the data through a SYCL mechanism. SYCL accessors are the important aspect of SYCL data management with buffers (explained later).

Control Device Execution

Because device code may require a different compiler or code generation mechanism from the host, it’s necessary to clearly identify sections of device code. The following image shows how this looks in SYCL 1.2.1. We use the submit method to enqueue work to the device queue, q. This method returns an opaque handler against which kernels run, in this case via parallel_for.

You can synchronize device execution using the wait() method. There are finer-grain methods for synchronizing device running, but we start with simplest one, which is a heavy hammer.

Some may find the previous code verbose, particularly when compared to models like Kokkos. The Intel oneAPI DPC++ Compiler supports a terse syntax, which is covered in the next section.

Compute Kernels and Buffers

SYCL accessors are the final piece in this SYCL program. Accessors may be unfamiliar to GPU programmers, but they have several nice properties compared to other methods. SYCL allows the programmer to move data explicitly using, for example, the copy() method. But, accessor methods don’t require this method because they generate a dataflow graph that the compiler and runtime can use to move data at the right time. This is effective when multiple kernels are invoked in sequence. In this case, the SYCL implementation deduces that data is reused and doesn't unnecessarily copy it back to the host. Also, you can schedule data movement asynchronously (where running devices overlap). While expert GPU programmers can do this manually, SYCL accessors often lead to better performance than OpenCL programs where programmers must move data explicitly.

Because programming models that assume pointers are handles to memory have a hard time with SYCL accessors, the USM extension makes accessors unnecessary. USM places a greater burden on the programmer in terms of data movement and synchronization but helps with compatibility in legacy code that wants to use pointers.

Review of Your First SYCL Program

Here are all of the components of the previously described SAXPY program in SYCL:

The full source code for this example is available in the GitHub repository.

SYCL 2020 USM

While the previous program is perfectly functional and can be implemented across a wide range of platforms, some users find it rather verbose. Furthermore, it’s not compatible with libraries and frameworks that need to manage memory using pointers. To address this issue with SYCL 1.2.1, Intel developed an extension in DPC++ called USM that supports pointer-based memory management.

USM supports two important usage models that are shown in the following image. The first one supports automatic data movement between the host and device. The second one is for explicit data movement to and from device allocations.

The details are in the SYCL 2020 provisional specification, but to get started, all you need to know is in the following image. The q argument is the queue associated with the device where the allocated data is stored (either permanently or temporarily):

If you're using device allocation, data must be explicitly moved (for example, using the SYCL memcpy method), which behaves the same as std::memcpy (the destination is on the left):

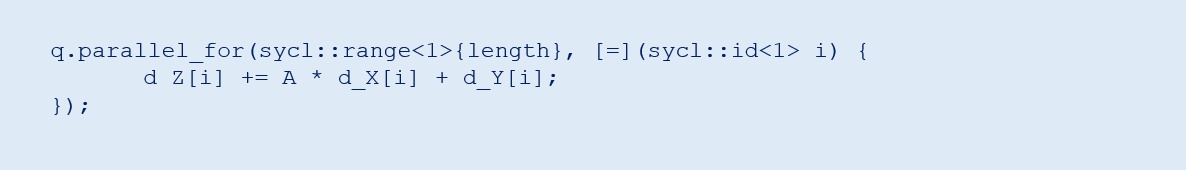

Accessors are no longer required in USM, which means you can simplify the previous kernel code to:

You can find the complete working examples of both versions of USM in the repositories named saxpy-usm.cc and saxpy-usm2.cc.

SYCL 2020 Terse Syntax

Finally, in case you're wondering why the opaque handler h was required in each of these programs, it turns out that it isn’t required after all. The following is an equivalent implementation that was added in the SYCL 2020 provisional specification. Also, you can take advantage of lambda names being optional in the SYCL 2020 provisional specification. Together, these two small changes make SYCL kernels the same length as the original C++ loop listed at the beginning of this tutorial:

You started with three lines of code that run sequentially on a CPU and ended with three lines of code that run in parallel on CPUs, GPUs, FPGAs, and other devices. Not everything is as simple as SAXPY, but now you know that SYCL doesn't make easy things hard. It builds on various modern C++ features and universal concepts like “parallel for” rather than introducing new things to learn.