Weigh the Tradeoffs to Maximize Performance

Gurbinder Gill, graduate research assistant, University of Texas at Austin, and Ramesh V. Peri, senior principal engineer, Intel Corporation


Multi-socket servers use non-uniform memory access (NUMA) architectures, in which the cores and the total DRAM are divided among sockets. Every core can access all of memory as a single address space, but accessing memory attached to its own socket is faster than accessing memory on a remote socket, hence the name non-uniform memory access. Because of this difference in access latency, local memory should be preferred whenever possible.

To keep accesses local, the Linux* kernel performs NUMA migrations (a feature called automatic NUMA balancing), moving memory pages to the sockets from which they are being accessed. To decide which pages to migrate, Linux maintains bookkeeping information, such as the number of accesses to each page from a given socket and the latency of those accesses. NUMA migrations are enabled by default in Linux unless an OS-level NUMA allocation policy is specified with a utility such as numactl.
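The bookkeeping idea can be illustrated with a deliberately simplified toy model: record which socket touched each page, and move a page when a strict majority of its recent accesses came from a socket other than the one holding it. This is not the kernel's actual algorithm, which samples accesses through NUMA hinting faults and applies further filters; the class name and the majority threshold are hypothetical.

```python
from collections import Counter, defaultdict


class ToyMigrator:
    """Hypothetical toy model of access-driven page migration.

    Not the Linux algorithm: it only illustrates "count accesses per
    socket, then move the page toward its main user".
    """

    def __init__(self, page_home: dict[int, int]):
        self.page_home = dict(page_home)      # page -> node the page lives on
        self.accesses = defaultdict(Counter)  # page -> Counter(node -> hits)
        self.migrations = 0

    def record_access(self, page: int, node: int) -> None:
        self.accesses[page][node] += 1

    def rebalance(self) -> None:
        """Move each page to the node that accessed it most, if that node
        differs from the page's home and holds a strict majority of hits."""
        for page, counts in self.accesses.items():
            node, hits = counts.most_common(1)[0]
            total = sum(counts.values())
            if node != self.page_home[page] and 2 * hits > total:
                self.page_home[page] = node
                self.migrations += 1
        self.accesses.clear()  # start a fresh sampling window


if __name__ == "__main__":
    m = ToyMigrator({0: 0, 1: 1})
    for _ in range(3):
        m.record_access(0, 1)  # page 0 lives on node 0 but node 1 uses it
    m.record_access(1, 0)
    m.record_access(1, 1)      # page 1 is shared evenly, so it stays put
    m.rebalance()
    print(m.page_home, m.migrations)  # {0: 1, 1: 1} 1
```

Every migration in this model costs a page copy, which is the overhead the rest of the article measures.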

NUMA page migrations can be useful when multiple applications run on a single machine, each with its own memory allocation. When the system is shared this way, it makes sense to move the memory pages belonging to a particular application closer to the cores assigned to that application.

This article argues that when a single application uses the entire machine, which is the most common scenario for high-performance applications, NUMA migrations can actually hurt performance. It also argues that application-level NUMA allocation policies are often preferable to OS-level utilities such as numactl, because they give finer control: an application can choose a different allocation policy for each of its data structures.

Let's look at two application-level NUMA allocation policies (Figure 1):

- NUMA interleave, where memory pages are distributed equally among NUMA sockets in round-robin fashion (similar to the numactl --interleave=all command).

- NUMA blocked, where the allocated memory is split into equal contiguous chunks, one per NUMA socket.

Figure 1. NUMA allocation policies (color-coded for two processors)
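The two policies differ only in which node each page of a buffer is placed on. A minimal sketch, assuming pages are numbered from 0 and nodes from 0; the function names are hypothetical.

```python
# Hypothetical helpers: map page index -> NUMA node under each policy.


def interleave_node(page: int, num_pages: int, num_nodes: int) -> int:
    """NUMA interleave: pages go to nodes round-robin (0, 1, ..., 0, 1, ...).
    num_pages is unused; it is kept so both policies share one signature."""
    return page % num_nodes


def blocked_node(page: int, num_pages: int, num_nodes: int) -> int:
    """NUMA blocked: the buffer is cut into num_nodes contiguous chunks of
    nearly equal size (within one page), and chunk k is placed on node k."""
    return page * num_nodes // num_pages


def layout(policy, num_pages: int, num_nodes: int) -> list[int]:
    """Node assignment for every page of the buffer under `policy`."""
    return [policy(p, num_pages, num_nodes) for p in range(num_pages)]


if __name__ == "__main__":
    # Eight pages on a two-socket system, as in the two-color figure.
    print("interleave:", layout(interleave_node, 8, 2))  # [0, 1, 0, 1, 0, 1, 0, 1]
    print("blocked:   ", layout(blocked_node, 8, 2))     # [0, 0, 0, 0, 1, 1, 1, 1]
```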

Evaluation on Intel® Xeon® Gold Processors

The efficacy of NUMA migrations is evaluated using a simple microbenchmark that allocates m bytes of memory (using the NUMA interleaved or blocked policy) and then writes to each location once using t threads. Each thread gets a contiguous block, which it writes sequentially.

Pseudocode for the memory allocation policies and for the simple computation is shown in Figure 2 and Figure 3, respectively. The experiments are conducted on a four-socket system with Intel® Xeon® Gold 5120 processors (56 cores in total, 14 per socket, with a clock rate of 2.2 GHz, and 187 GB of DDR4 DRAM). Hyperthreading was disabled during the evaluation.

Figure 2. Pseudocode showing the memory allocation policies: NUMA interleaved and NUMA blocked
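Because the kernel places memory one page at a time, a blocked allocation has to cut the buffer at page boundaries. The sketch below computes one (node, offset, length) chunk per socket, using the same rule as "page p goes to node p * num_nodes // num_pages". In a C implementation, each chunk could then be bound to its node, for example with libnuma's numa_tonode_memory() or the mbind(2) system call, and an interleaved buffer could come from numa_alloc_interleaved(). The helper names and the 4 KiB page size are assumptions, not the authors' code.

```python
# Hypothetical helper; assumes 4 KiB base pages. On a real system,
# os.sysconf("SC_PAGE_SIZE") reports the actual page size.
PAGE_SIZE = 4096


def ceil_div(a: int, b: int) -> int:
    return -(-a // b)


def blocked_plan(size_bytes: int, num_nodes: int,
                 page_size: int = PAGE_SIZE) -> list[tuple[int, int, int]]:
    """Split a buffer into contiguous, page-aligned chunks, one per node.

    Returns (node, offset, length) tuples. Chunk sizes differ by at most
    one page; nodes that would get zero pages are skipped.
    """
    num_pages = ceil_div(size_bytes, page_size)
    plan = []
    for node in range(num_nodes):
        # Page p belongs to node p * num_nodes // num_pages, so node k owns
        # pages [ceil(k*N/n), ceil((k+1)*N/n)) with N pages and n nodes.
        first = ceil_div(node * num_pages, num_nodes)
        last = ceil_div((node + 1) * num_pages, num_nodes)
        if last > first:
            plan.append((node, first * page_size, (last - first) * page_size))
    return plan


if __name__ == "__main__":
    for node, offset, length in blocked_plan(10 * PAGE_SIZE, 4):
        print(f"node {node}: offset {offset}, {length // PAGE_SIZE} pages")
```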

Figure 3. Pseudocode showing the simple microbenchmark computation used in this study
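The structure of the computation (split the buffer into t contiguous blocks, then have each thread write its block once, sequentially) can be sketched as follows. This Python version only mirrors the work decomposition; because of the interpreter lock it does not run the writes in parallel and says nothing about NUMA effects, which would require a native implementation with threads pinned to cores. All names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def thread_ranges(m: int, t: int) -> list[tuple[int, int]]:
    """Give each of t threads a contiguous [start, end) block of an m-byte
    buffer; block sizes differ by at most one byte."""
    return [(i * m // t, (i + 1) * m // t) for i in range(t)]


def write_once(buf: bytearray, start: int, end: int, value: int) -> None:
    """Write `value` to every byte of buf[start:end], front to back."""
    buf[start:end] = bytes([value]) * (end - start)


def run_microbenchmark(m: int, t: int) -> bytearray:
    buf = bytearray(m)  # stand-in for the NUMA-allocated buffer
    with ThreadPoolExecutor(max_workers=t) as pool:
        futures = [pool.submit(write_once, buf, s, e, 1)
                   for s, e in thread_ranges(m, t)]
        for f in futures:
            f.result()  # re-raise any exception from a worker
    return buf


if __name__ == "__main__":
    print(thread_ranges(10, 3))  # [(0, 3), (3, 6), (6, 10)]
```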

Effect of NUMA Migration for Different NUMA Allocation Policies

Figure 4 shows the runtime of the microbenchmark using t = 56 threads and interleaved allocation (Figure 1) as the memory allocation size m increases. Doubling the workload doubles the runtime, as expected, but the number of pages migrated during the run also increases significantly. A similar pattern occurs for NUMA blocked allocation (Figure 5), but blocked allocation performs better because no pages need to be migrated for workloads of up to 40 GB: the memory pages are allocated on, and accessed from, the same socket during the computation.
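Why blocked allocation keeps accesses local can be checked with a simple model. Assume (hypothetically) that threads are spread evenly across sockets in order, so threads 0 to 13 run on socket 0 when 56 threads share four sockets, and that thread i writes the i-th contiguous block of pages. The sketch counts how many page writes land on the writer's own socket. It ignores huge pages, scheduling, and everything else the kernel does, and it does not explain when migrations start to appear for larger blocked workloads.

```python
def local_fraction(policy: str, num_pages: int, num_nodes: int,
                   num_threads: int) -> float:
    """Fraction of page writes that hit the writing thread's own socket.

    Hypothetical model: thread i runs on socket i * num_nodes // num_threads
    and writes pages [i * num_pages // num_threads, (i+1) * num_pages // num_threads).
    """
    local = 0
    for i in range(num_threads):
        socket = i * num_nodes // num_threads
        start = i * num_pages // num_threads
        end = (i + 1) * num_pages // num_threads
        for page in range(start, end):
            if policy == "interleave":
                node = page % num_nodes
            elif policy == "blocked":
                node = page * num_nodes // num_pages
            else:
                raise ValueError(f"unknown policy: {policy}")
            local += node == socket
    return local / num_pages


if __name__ == "__main__":
    # 56 threads on 4 sockets, as in the experiments; 5,600 pages.
    print(local_fraction("interleave", 5600, 4, 56))  # 0.25
    print(local_fraction("blocked", 5600, 4, 56))     # 1.0
```

Under interleaving, three of every four pages a thread writes sit on a remote socket, which gives the kernel a reason to migrate them; under blocked allocation every write is already local.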

Figure 4. Microbenchmark using 56 threads and NUMA interleaved allocation with increasing workload size. The numbers on the bars show how many memory pages were migrated (in thousands).

Figure 5. Microbenchmark using 56 threads and NUMA blocked allocation with increasing workload size. The numbers on the bars show how many memory pages were migrated (in thousands).
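Migration counts like those on the bars can be observed on Linux through /proc/vmstat, which, on kernels built with automatic NUMA balancing, includes a numa_pages_migrated counter. The article does not say how its counts were collected, so this is one possible approach, not the authors' method; the helper names are hypothetical.

```python
from pathlib import Path


def parse_vmstat(text: str) -> dict[str, int]:
    """Parse /proc/vmstat-style "name value" lines into a dict."""
    counters = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == 2:
            counters[parts[0]] = int(parts[1])
    return counters


def read_vmstat(path: str = "/proc/vmstat") -> dict[str, int]:
    return parse_vmstat(Path(path).read_text())


def pages_migrated_during(run, counter: str = "numa_pages_migrated") -> int:
    """Call run() and return how much the given vmstat counter grew.

    The counter is system-wide, so migrations caused by other processes
    are included; measure on an otherwise idle machine.
    """
    before = read_vmstat().get(counter, 0)
    run()
    after = read_vmstat().get(counter, 0)
    return after - before
```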

Effect of NUMA Migration on a Single Socket

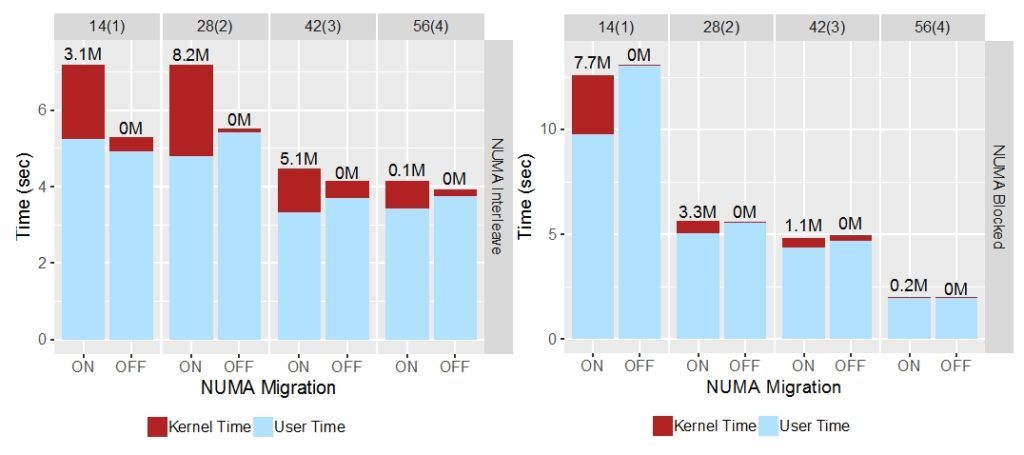

Figure 6 shows the total time taken for a 160 GB workload using different numbers of threads on a single socket, broken down into time spent in user code and in kernel code. Because the total memory is divided equally among the sockets, each socket has approximately 47 GB (187 GB divided among four sockets), so the 160 GB workload is necessarily allocated across all four sockets even though the threads run on only one. The microbenchmark scales with the number of threads for both allocation policies. Increasing the number of threads decreases the runtime, which in turn reduces the number of pages migrated: the longer an application runs, the more pages the operating system kernel migrates.
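The capacity arithmetic above can be worked through explicitly. The inputs are the figures quoted for the test system; the function name is hypothetical.

```python
import math


def socket_capacity_check(total_dram_gb: float, sockets: int, workload_gb: float):
    """Return (memory per socket, number of sockets' worth of memory the
    workload needs, largest fraction of the workload one socket can hold)."""
    per_socket = total_dram_gb / sockets
    sockets_needed = math.ceil(workload_gb / per_socket)
    max_local_share = min(1.0, per_socket / workload_gb)
    return per_socket, sockets_needed, max_local_share


if __name__ == "__main__":
    per_socket, needed, share = socket_capacity_check(187, 4, 160)
    print(f"{per_socket:.2f} GB per socket")           # 46.75 GB per socket
    print(f"needs memory from {needed} sockets")       # needs memory from 4 sockets
    print(f"at most {share:.0%} can be socket-local")  # at most 29% can be socket-local
```

So with all threads on one socket, at most about 29% of the 160 GB can ever be local to those threads, however the pages are placed or migrated.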

The red part of each stacked bar shows the time spent in kernel code migrating pages; it drops to almost zero when NUMA migration is disabled. Turning off NUMA migrations yields a geometric-mean speedup of 2.4x for interleaved allocation and 1.6x for blocked allocation, which shows that NUMA migration has a significant impact on performance.
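A geometric mean is the usual way to average ratios such as speedups, because it treats a 2x speedup and a 2x slowdown symmetrically. A minimal sketch, assuming the average is taken over per-configuration speedups (presumably the thread counts in Figure 6); the helper names are hypothetical and no measured data is included.

```python
import math


def speedup(time_with_migration: float, time_without: float) -> float:
    """Speedup from disabling migration: old time divided by new time."""
    return time_with_migration / time_without


def geomean(values) -> float:
    """Geometric mean: exp of the mean of the logs (values must be > 0)."""
    values = list(values)
    if not values or any(v <= 0 for v in values):
        raise ValueError("geomean needs a non-empty list of positive numbers")
    return math.exp(sum(math.log(v) for v in values) / len(values))
```

Given matching lists of runtimes, the summary figure would be geomean(speedup(a, b) for a, b in zip(times_with, times_without)).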

Figure 6. Microbenchmark with a fixed workload size (160 GB) using different numbers of threads on a single socket. The number of threads is at the top, with the number of sockets in parentheses. The numbers on the bars show how many memory pages were migrated (in millions).

The Effect of NUMA Migration across Multiple Sockets

The pattern observed on a single socket (Figure 6) also holds beyond one socket (Figure 7). Each chart shows performance with and without NUMA migration as the number of sockets increases, using all the cores on those sockets. The time spent in the kernel is always reduced when NUMA migration is disabled. Interestingly, the time spent in user code increases slightly when NUMA migration is disabled, which indicates that migrations do reduce memory access latency. However, the overhead of performing the migrations can outweigh this benefit and end up hurting overall performance.
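A rough user/kernel split for your own runs can be obtained from getrusage(2), which Python exposes through the resource module (Unix only). This is an assumption about how such a breakdown might be approximated, not how the charts were produced. Kernel work done in the process's own context, such as handling page faults, is charged to its system time, while work done by separate kernel threads is not; the helper name is hypothetical.

```python
import resource
import time


def user_kernel_times(work) -> tuple[float, float, float]:
    """Call work() and return the (wall, user, system) seconds it took.

    RUSAGE_SELF covers all threads of the current process.
    """
    start = resource.getrusage(resource.RUSAGE_SELF)
    t0 = time.perf_counter()
    work()
    wall = time.perf_counter() - t0
    end = resource.getrusage(resource.RUSAGE_SELF)
    return wall, end.ru_utime - start.ru_utime, end.ru_stime - start.ru_stime


if __name__ == "__main__":
    wall, user, system = user_kernel_times(lambda: bytearray(200 * 1024 * 1024))
    print(f"wall {wall:.3f}s  user {user:.3f}s  kernel {system:.3f}s")
```

Running the same workload with automatic NUMA balancing on and off and comparing the system-time column gives a first-order view of the migration overhead.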

Figure 7. Microbenchmark with a fixed workload size (160 GB) using different numbers of threads on different numbers of sockets. The number of threads is at the top, with the number of sockets in parentheses. The numbers on the bars show how many memory pages were migrated (in millions).

Maximize Performance

These results show that operating-system-level features such as NUMA migrations must be used with caution. They can have significant performance overhead, especially for a single application running on the entire machine, which is the most common scenario for high-performance computing.

The effect of NUMA migrations on an application's runtime depends on several factors, such as:

- The NUMA allocation policy used

- The number of sockets the application runs on

To avoid the overhead and performance noise introduced by NUMA page migrations, make sure these operating-system-level features are turned off (NUMA migrations are on by default), as shown in Figure 8:

Figure 8. Turn off operating-system-level features
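On Linux, automatic NUMA balancing is controlled by the kernel.numa_balancing sysctl, exposed as the file /proc/sys/kernel/numa_balancing, where 0 means disabled. The sketch below reads and sets it; it is one possible way to do what the text describes and may differ from the commands in Figure 8. The helper names are hypothetical. Writing requires root and affects every process on the machine, so record the previous value and restore it after benchmarking.

```python
from pathlib import Path

# Real sysctl file; the helper functions below are hypothetical.
NUMA_BALANCING = Path("/proc/sys/kernel/numa_balancing")


def numa_balancing_enabled(path: Path = NUMA_BALANCING) -> bool:
    """True if automatic NUMA balancing is on (any nonzero value)."""
    return int(path.read_text().strip()) != 0


def set_numa_balancing(enabled: bool, path: Path = NUMA_BALANCING) -> None:
    """Write 1 or 0 to the sysctl file. Needs root; lasts until reboot.
    Disabling is equivalent to `sysctl -w kernel.numa_balancing=0`."""
    path.write_text("1\n" if enabled else "0\n")


if __name__ == "__main__":
    if NUMA_BALANCING.exists():
        print("automatic NUMA balancing enabled:", numa_balancing_enabled())
```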

References

- Numactl Utility

- David Ott, Optimizing Applications for NUMA (Intel Corporation, 2011)
