Overview

In this article, we configure Open vSwitch* with the Data Plane Development Kit (OvS-DPDK) on Ubuntu Server* 17.04. With the new release of this package, OvS-DPDK has been updated to use the latest releases of both the DPDK (v16.11.1) and Open vSwitch (v2.6.1) projects. We took it for a test drive and were impressed with how seamless and easy it is to use OvS-DPDK on Ubuntu*.

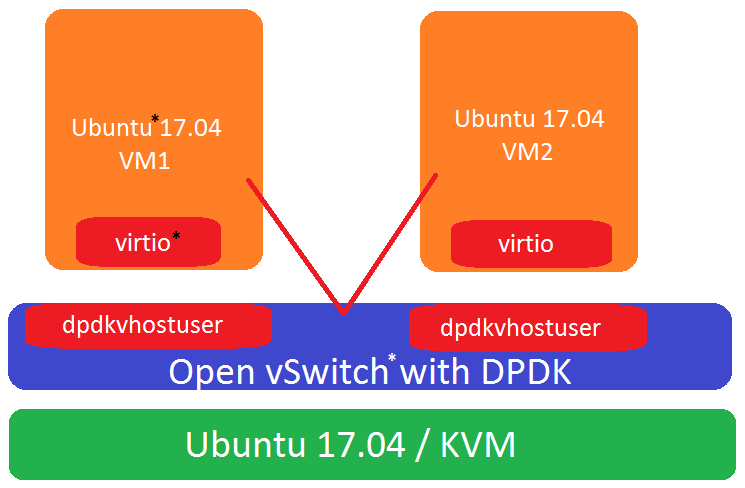

We configured OvS-DPDK with two vhost-user ports and allocated them to two virtual machines (VMs). We then ran a simple iPerf3* test case. The following diagram captures the setup.

Test-Case Configuration

Installing OvS-DPDK using Advanced Packaging Tool (APT)

To install OvS-DPDK on our system, we run the following commands. The second command updates the ovs-vswitchd alternative so that it points to the DPDK-enabled ovs-vswitchd-dpdk binary.

sudo apt-get install openvswitch-switch-dpdk

sudo update-alternatives --set ovs-vswitchd /usr/lib/openvswitch-switch-dpdk/ovs-vswitchd-dpdk

Then restart the ovs-vswitchd service with the following command to use the DPDK:

sudo systemctl restart openvswitch-switch.service

Configuring Ubuntu* Server 17.04 for OvS-DPDK

The system we are using in this demo is a 2-socket, 22 cores per socket, Intel® Hyper-Threading Technology (Intel® HT Technology) enabled server, giving us 88 logical cores total. The CPU model used is an Intel® Xeon® CPU E5-2699 v4 @ 2.20GHz. To configure Ubuntu for optimal use of OvS-DPDK, we will change the GRUB* command-line options that are passed to Ubuntu at boot time for our system. To do this we will edit the following config file:

/etc/default/grub

Change the setting GRUB_CMDLINE_LINUX_DEFAULT to the following:

GRUB_CMDLINE_LINUX_DEFAULT="default_hugepagesz=1G hugepagesz=1G hugepages=16 hugepagesz=2M hugepages=2048 iommu=pt intel_iommu=on isolcpus=1-21,23-43,45-65,67-87"

This makes GRUB aware of the new options to pass to Ubuntu at boot time. We set isolcpus so that the Linux* scheduler runs on only two physical cores (logical cores 0, 22, 44, and 66, which are left out of the list). Later, we will allocate the remaining cores to the DPDK. We also set the number of pages and the page size for hugepages. For details on why hugepages are required, and how they can help to improve performance, please see the explanation in the Getting Started Guide for Linux on dpdk.org.

Note: The isolcpus setting varies depending on how many cores are available per CPU.
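Because the right isolcpus value depends on the core count, it can help to generate the list rather than type it by hand. The following is a minimal sketch, not part of the OvS-DPDK package. The function name isolcpus_list is hypothetical, and it assumes that logical cores are numbered in contiguous blocks (one block per socket per hardware thread, as on the system described above) and that the first core of each block is left to the Linux scheduler.

```shell
#!/bin/sh
# Hypothetical helper (not shipped with OvS-DPDK): print an isolcpus list that
# isolates every logical core except the first one in each block.
# Assumption: cores are numbered in contiguous blocks of $1 cores, and there
# are $2 blocks (sockets x hardware threads per core).
isolcpus_list() (
    per_block=$1
    blocks=$2
    out=""
    b=0
    while [ "$b" -lt "$blocks" ]; do
        first=$(( b * per_block + 1 ))
        last=$(( (b + 1) * per_block - 1 ))
        # Skip blocks that have no core left to isolate.
        if [ "$first" -le "$last" ]; then
            out="$out${out:+,}$first-$last"
        fi
        b=$(( b + 1 ))
    done
    echo "$out"
)

# 22 cores per socket, 2 sockets x 2 hardware threads = 4 blocks
isolcpus_list 22 4
# prints 1-21,23-43,45-65,67-87
```

On a system with a different core numbering scheme, check the layout with lscpu before relying on this output.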

Also, we will edit /etc/dpdk/dpdk.conf to specify the number of hugepages to reserve on system boot. Uncomment and change the setting NR_1G_PAGES to the following:

NR_1G_PAGES=8

Depending on your system memory size, you may increase or decrease the number of 1G pages.
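To judge whether a hugepage reservation fits in system memory, it helps to add up what the kernel command line requests. The sketch below does that arithmetic for a command line like the GRUB setting shown earlier. The function name hugepage_mib is hypothetical, and as a simplification it ignores default_hugepagesz, so each hugepages=N is expected to follow a hugepagesz= option.

```shell
#!/bin/sh
# Hypothetical helper: sum the memory (in MiB) requested by the hugepage
# options on a kernel command line. Simplification: default_hugepagesz= is
# ignored, so every hugepages=N must follow a hugepagesz= option.
hugepage_mib() (
    set -f                      # no filename expansion while splitting $1
    size_mib=0
    total=0
    for tok in $1; do
        case $tok in
            hugepagesz=*G) n=${tok#hugepagesz=}; size_mib=$(( ${n%G} * 1024 )) ;;
            hugepagesz=*M) n=${tok#hugepagesz=}; size_mib=${n%M} ;;
            hugepages=*)   total=$(( total + ${tok#hugepages=} * size_mib )) ;;
        esac
    done
    echo "$total"
)

hugepage_mib "default_hugepagesz=1G hugepagesz=1G hugepages=16 hugepagesz=2M hugepages=2048"
# prints 20480 (16 x 1024 MiB + 2048 x 2 MiB)
```

On our example command line, this comes to 20 GiB set aside for hugepages, which is the figure to compare against installed memory.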

After both files have been updated, run the following commands:

sudo update-grub

sudo reboot

The reboot applies the new settings. During the boot, also enter the BIOS and enable:

- Intel® Virtualization Technology (Intel® VT-x)

- Intel® Virtualization Technology (Intel® VT) for Directed I/O (Intel® VT-d)

After logging back in to Ubuntu, we create mount points for our hugepages:

sudo mkdir -p /mnt/huge

sudo mkdir -p /mnt/huge_2mb

sudo mount -t hugetlbfs none /mnt/huge

sudo mount -t hugetlbfs none /mnt/huge_2mb -o pagesize=2MB

sudo mount -t hugetlbfs none /dev/hugepages

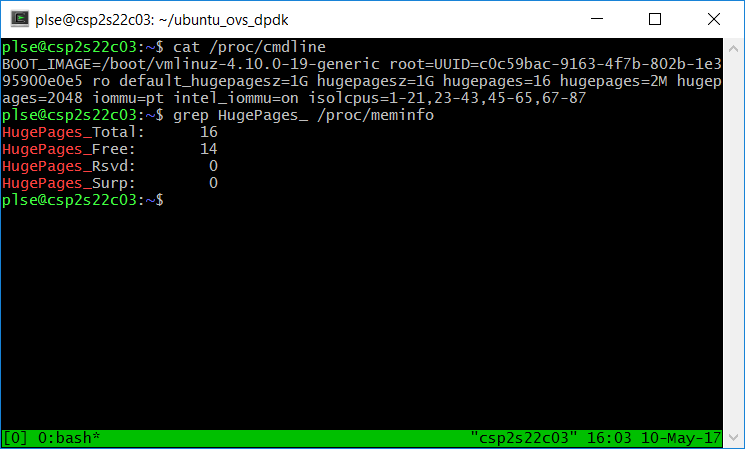

To ensure that the changes are in effect, run the commands below:

grep HugePages_ /proc/meminfo

cat /proc/cmdline

If the changes took place, your output from the above commands should look similar to the image below:
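The same check can be scripted rather than read by eye. The sketch below condenses the hugepage counters from /proc/meminfo into a single line. The function name hugepages_summary is hypothetical. Note that these counters describe only the default hugepage size (1G with the settings used in this article).

```shell
#!/bin/sh
# Hypothetical helper: condense the hugepage counters of /proc/meminfo
# (read from standard input) into one line.
hugepages_summary() {
    awk '
        /^HugePages_Total:/ { total = $2 }
        /^HugePages_Free:/  { free = $2 }
        /^Hugepagesize:/    { size_kb = $2 }
        END { printf "total=%d free=%d size_kB=%d\n", total, free, size_kb }
    '
}

# Only meaningful on Linux, where /proc/meminfo exists.
if [ -r /proc/meminfo ]; then
    hugepages_summary < /proc/meminfo
fi
```

A total of 0 after the reboot would suggest that the GRUB changes were not applied.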

Configuring OvS-DPDK Settings

To initialize the OvS database, a one-time step, we run the following command:

sudo ovs-vsctl --no-wait init

The OvS database contains user-set options for OvS and the DPDK. To pass arguments to the DPDK, we use the ovs-vsctl command-line utility as follows:

sudo ovs-vsctl set Open_vSwitch . <argument>

Additionally, the OvS-DPDK package relies on the following config files:

/etc/dpdk/dpdk.conf – Configures hugepages

/etc/dpdk/interfaces – Configures/assigns network interface cards (NICs) for DPDK use

For more information on OvS-DPDK, unzip the following files:

- /usr/share/doc/openvswitch-common/INSTALL.DPDK.md.gz: OvS DPDK install guide

- /usr/share/doc/openvswitch-common/INSTALL.DPDK-ADVANCED.md.gz: Advanced OvS DPDK install guide

Next, we will configure OvS to use DPDK with the following command:

sudo ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-init=true

Once OvS is set up to use DPDK, we will change one OvS setting and two important DPDK configuration settings, and bind our NIC devices to the DPDK.

DPDK Settings

- dpdk-lcore-mask: Specifies the CPU cores on which dpdk lcore threads should be spawned. A hex string is expected.

- dpdk-socket-mem: Comma-separated list of memory to preallocate from hugepages on specific sockets.

OvS Settings

- pmd-cpu-mask: PMD (poll mode driver) threads can be created and pinned to CPU cores by explicitly specifying pmd-cpu-mask. These threads poll the DPDK devices for new packets instead of having the NIC driver send an interrupt when a new packet arrives.

The following commands are used to configure these settings:

sudo ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-lcore-mask=0xfffffbffffefffffbffffe

sudo ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-socket-mem="1024,1024"

sudo ovs-vsctl set Open_vSwitch . other_config:pmd-cpu-mask=1E0000000001E

For dpdk-lcore-mask, we used a mask of 0xfffffbffffefffffbffffe to specify the CPU cores on which the dpdk-lcore threads should spawn. Each bit of the mask corresponds to one logical core, with bit 0 as the least significant bit. On our system, the dpdk-lcore threads spawn on all cores except cores 0, 22, 44, and 66, which are reserved for the Linux scheduler. Similarly, for pmd-cpu-mask, we used the mask 1E0000000001E to spawn four PMD threads for non-uniform memory access (NUMA) Node 0 and another four PMD threads for NUMA Node 1. Lastly, since we have a two-socket system, we allocate 1 GB of memory per NUMA node with dpdk-socket-mem; that is, "1024,1024". For a single-socket system, the string would just be "1024".
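Hex masks this long are hard to read, so decoding them back into core numbers is a useful sanity check before applying them. The following sketch lists the logical cores whose bits are set in a mask. The function name mask_to_cores is hypothetical. It walks the string one hex digit at a time so that masks wider than 64 bits, such as the 88-bit dpdk-lcore-mask above, do not overflow shell arithmetic.

```shell
#!/bin/sh
# Hypothetical helper: print the logical core numbers whose bits are set in a
# hex CPU mask (with or without a leading 0x). The mask is processed one hex
# digit at a time, starting from the least significant digit.
mask_to_cores() (
    hex=${1#0x}
    bit=0
    out=""
    while [ -n "$hex" ]; do
        rest=${hex%?}               # everything except the last digit
        digit=${hex#"$rest"}        # the last (least significant) digit
        val=$(( 0x$digit ))
        for i in 0 1 2 3; do
            if [ $(( (val >> i) & 1 )) -eq 1 ]; then
                out="$out${out:+ }$(( bit + i ))"
            fi
        done
        bit=$(( bit + 4 ))
        hex=$rest
    done
    echo "$out"
)

mask_to_cores 0x1E              # prints 1 2 3 4
mask_to_cores 1E0000000001E     # the pmd-cpu-mask used in this article
```

Which NUMA node a given logical core belongs to depends on how the system enumerates its cores, so it is worth comparing the decoded list against the NUMA node lines in the lscpu output.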

Creating OvS-DPDK Bridge and Ports

For our sample test case, we will create a bridge and add two DPDK vhost-user ports. To create an OvS bridge and two DPDK ports, run the following commands:

sudo ovs-vsctl add-br br0 -- set bridge br0 datapath_type=netdev

sudo ovs-vsctl add-port br0 vhost-user1 -- set Interface vhost-user1 type=dpdkvhostuser

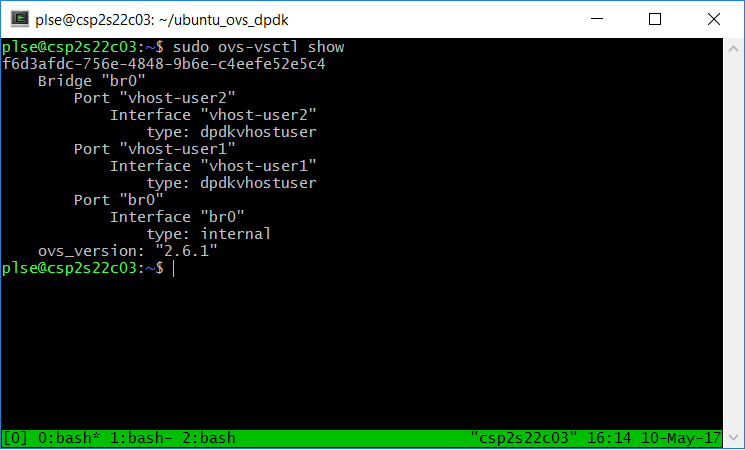

sudo ovs-vsctl add-port br0 vhost-user2 -- set Interface vhost-user2 type=dpdkvhostuser

To ensure that the bridge and vhost-user ports have been properly set up and configured, run the command:

sudo ovs-vsctl show

If all is successful, you should see output like the image below:

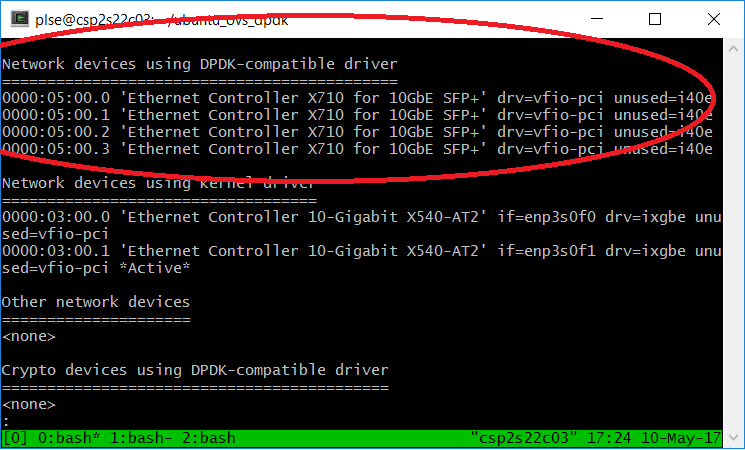
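For setups with more than two VMs, the bridge and port commands follow a regular pattern and can be generated. The sketch below only prints the commands (a dry run) so that they can be reviewed first. The function name vhost_bridge_cmds is hypothetical, and the vhost-userN port naming simply extends the names used in this article.

```shell
#!/bin/sh
# Hypothetical helper: print (not run) the ovs-vsctl commands that create a
# netdev bridge with N dpdkvhostuser ports named vhost-user1..vhost-userN.
vhost_bridge_cmds() (
    bridge=$1
    count=$2
    echo "sudo ovs-vsctl add-br $bridge -- set bridge $bridge datapath_type=netdev"
    i=1
    while [ "$i" -le "$count" ]; do
        port="vhost-user$i"
        echo "sudo ovs-vsctl add-port $bridge $port -- set Interface $port type=dpdkvhostuser"
        i=$(( i + 1 ))
    done
)

# Prints the same three commands used in this section.
vhost_bridge_cmds br0 2
```

Once the printed commands look right, they can be executed by piping the output to sh.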

Binding Devices to DPDK

To bind your NIC device to the DPDK, you must run the dpdk-devbind command. For example, to unbind eth1 from its current driver and bind it to the vfio-pci driver, run:

dpdk-devbind --bind=vfio-pci eth1

To use the vfio-pci driver, first run modprobe to load it and its dependencies.

This is what it looked like on our system, with 4 x 10 Gb interfaces available:

sudo modprobe vfio-pci

sudo dpdk-devbind --bind=vfio-pci ens785f0

sudo dpdk-devbind --bind=vfio-pci ens785f1

sudo dpdk-devbind --bind=vfio-pci ens785f2

sudo dpdk-devbind --bind=vfio-pci ens785f3

To check whether the NICs you specified are bound to the DPDK, run the command:

sudo dpdk-devbind --status

If all is correct, you should have an output similar to the image below:

Using DPDK vhost-user Ports with VMs

Creating VMs is out of the scope of this document. Once we have two VMs created (in this example, virtual disks us17_04vm1.qcow2 and us17_04vm2.qcow2), the following commands show how to use the DPDK vhost-user ports we created earlier.

Ensure that the QEMU* version on the system is v2.2.0 or above, as discussed under “DPDK vhost-user Prerequisites” in the OvS DPDK Install Guide on https://github.com/openvswitch.

sudo qemu-system-x86_64 -m 1024 -smp 4 -cpu host -hda /home/user/us17_04vm1.qcow2 -boot c -enable-kvm -no-reboot -net none -nographic \
-chardev socket,id=char1,path=/run/openvswitch/vhost-user1 \
-netdev type=vhost-user,id=mynet1,chardev=char1,vhostforce \
-device virtio-net-pci,mac=00:00:00:00:00:01,netdev=mynet1 \
-object memory-backend-file,id=mem,size=1G,mem-path=/dev/hugepages,share=on \
-numa node,memdev=mem -mem-prealloc \
-virtfs local,path=/home/user/iperf_debs,mount_tag=host0,security_model=none,id=vm1_dev

sudo qemu-system-x86_64 -m 1024 -smp 4 -cpu host -hda /home/user/us17_04vm2.qcow2 -boot c -enable-kvm -no-reboot -net none -nographic \
-chardev socket,id=char2,path=/run/openvswitch/vhost-user2 \
-netdev type=vhost-user,id=mynet2,chardev=char2,vhostforce \
-device virtio-net-pci,mac=00:00:00:00:00:02,netdev=mynet2 \
-object memory-backend-file,id=mem,size=1G,mem-path=/dev/hugepages,share=on \
-numa node,memdev=mem -mem-prealloc \
-virtfs local,path=/home/user/iperf_debs,mount_tag=host0,security_model=none,id=vm2_dev
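The two QEMU commands differ only in the VM number that appears in the disk image name, the vhost-user socket path, the MAC address, and the device ids. A small generator makes that pattern explicit. This is a sketch under that assumption: the function name vm_cmd is hypothetical, the paths are the example paths from this article, and the function only prints the command so that it can be inspected before running.

```shell
#!/bin/sh
# Hypothetical helper: print the QEMU command line for VM number $1, following
# the pattern of the two commands in this article. The disk image, socket
# path, MAC address, and ids all derive from the VM number.
vm_cmd() (
    n=$1
    mac=$(printf '00:00:00:00:00:%02x' "$n")
    # Every line except the last ends with a shell line continuation.
    printf '%s \\\n' \
        "sudo qemu-system-x86_64 -m 1024 -smp 4 -cpu host -hda /home/user/us17_04vm$n.qcow2 -boot c -enable-kvm -no-reboot -net none -nographic" \
        "-chardev socket,id=char$n,path=/run/openvswitch/vhost-user$n" \
        "-netdev type=vhost-user,id=mynet$n,chardev=char$n,vhostforce" \
        "-device virtio-net-pci,mac=$mac,netdev=mynet$n" \
        "-object memory-backend-file,id=mem,size=1G,mem-path=/dev/hugepages,share=on" \
        "-numa node,memdev=mem -mem-prealloc"
    printf '%s\n' \
        "-virtfs local,path=/home/user/iperf_debs,mount_tag=host0,security_model=none,id=vm${n}_dev"
)

vm_cmd 1
```

Generating the command this way keeps the socket path and the vhost-user port name in step, which is the detail most likely to go wrong when the commands are edited by hand.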

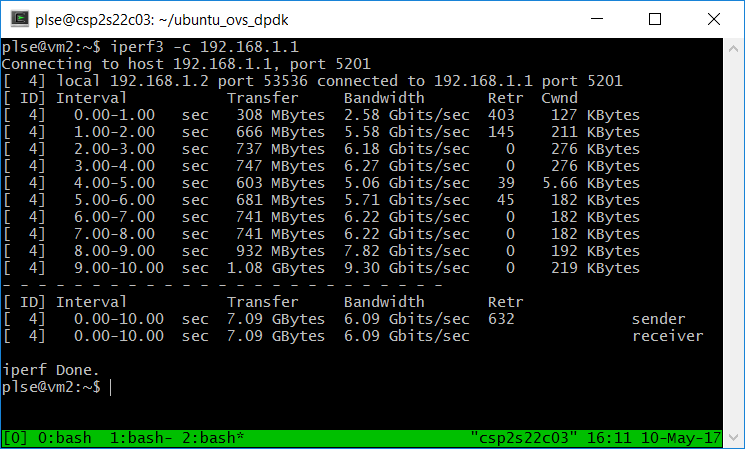

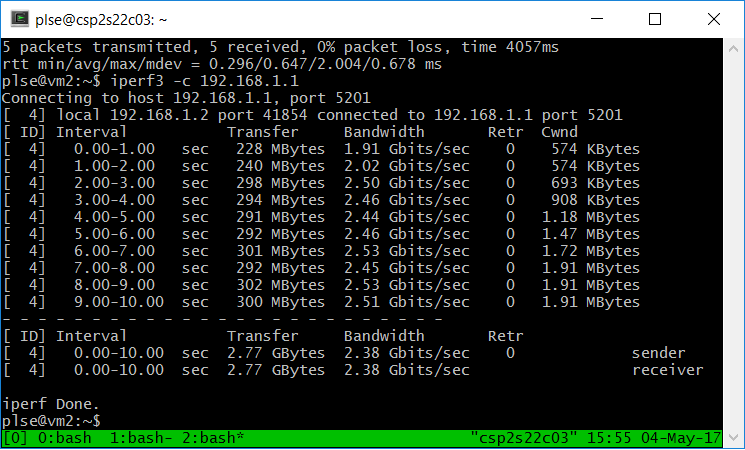

DPDK vhost-user inter-VM Test Case with iPerf3

In the previous step, we configured two VMs, each with a Virtio* NIC that is connected to the OvS-DPDK bridge.

Configure the NIC IP address on both VMs to be on the same subnet. Install iPerf3 from http://software.es.net/iperf, and then run a simple network test case. On one VM, start iPerf3 in server mode with iperf3 -s, and on the other VM, run the iPerf3 client with iperf3 -c server_ip. The network throughput and performance vary, depending on your system hardware capabilities and configuration.

OvS Using DPDK

OvS Without DPDK

From the above images, we observe that the OvS-DPDK transfer rate is roughly 2.5x greater than that of OvS without DPDK.

Summary

Ubuntu has standard packages available for using OvS-DPDK. In this article, we discussed how to install, configure, and use this package for enhanced network throughput and performance. We also covered how to configure a simple OvS-DPDK bridge with DPDK vhost-user ports for an inter-VM application use case. Lastly, we observed that OvS with DPDK gave us a roughly 2.5x greater transfer rate than OvS without DPDK on a simple inter-VM test case on our system.

About the Author

Yaser Ahmed is a software engineer at Intel Corporation who has an MS degree in Applied Statistics from DePaul University and a BS degree in Electrical Engineering from the University of Minnesota.