Introduction

Intel® Optane™ persistent memory (PMem) is a disruptive technology that creates a new tier between memory and storage. Intel Optane™ PMem modules support two modes: Memory Mode for volatile use cases and App Direct Mode that provides byte-addressable persistent storage.

In this guide, we provide instructions for configuring and managing Intel Optane PMem modules using the following tools:

- ipmctl - Intel Persistent Memory Control - is an open-source utility created and maintained by Intel specifically for configuring and managing Intel Optane PMem modules. It is available for both Linux and Windows* from the ipmctl project page on GitHub*.

- ndctl - Non-Volatile Device Control - is an open-source utility used for managing persistent memory namespaces on Linux.

- PowerShell* - Microsoft* has created PowerShell cmdlets for persistent memory disk management.

This quick start guide (QSG) has three sections:

- Quick Start Guide Part 1: Persistent Memory Provisioning Introduction

- Quick Start Guide Part 2: Provisioning for Intel® Optane™ Persistent Memory on Linux

- Quick Start Guide Part 3: Provisioning for Intel® Optane™ Persistent Memory on Microsoft Windows

Persistent Memory Provisioning Concepts

This section describes the basic terminology and concepts applicable to the configuration and management of Non-Volatile Dual In-line Memory Modules (NVDIMMs), including PMem.

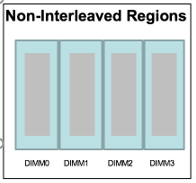

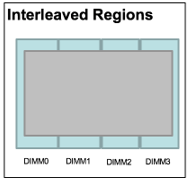

Region

A region is a group of one or more PMem modules, also known as an interleaved set. Regions are created in interleaved or non-interleaved configurations. Interleaving is a technique that makes multiple PMem devices appear as a single logical address space. It spreads adjacent addresses across multiple memory devices, and this hardware-level parallelism increases the available bandwidth from the devices.
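
The effect of interleaving can be pictured with a small address-mapping model. This is only a sketch: the 4 KiB granularity and the six-module set are assumed placeholder values, and the actual layout is chosen by the platform.

```python
# Illustrative model only. Both constants are assumptions, not platform facts.
INTERLEAVE_BYTES = 4096   # assumed interleave granularity
MODULES_IN_SET = 6        # assumed number of PMem modules in the region


def locate(region_offset):
    """Map a byte offset within the region to (module index, offset on that module)."""
    chunk = region_offset // INTERLEAVE_BYTES     # which interleave chunk this byte is in
    module = chunk % MODULES_IN_SET               # chunks rotate across the modules
    stripe = chunk // MODULES_IN_SET              # full rotations completed so far
    module_offset = stripe * INTERLEAVE_BYTES + region_offset % INTERLEAVE_BYTES
    return module, module_offset


# Consecutive chunks land on consecutive modules, so a large sequential
# access can be serviced by several modules in parallel.
touched = [locate(i * INTERLEAVE_BYTES)[0] for i in range(8)]
print(touched)
```

In a non-interleaved configuration, each module would instead form its own region, and a sequential access would stay on a single module.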

Regions are created when the platform configuration goal is defined: Memory Mode or App Direct. The configuration goal can be created or changed using the ipmctl utility or options in the BIOS.

Label

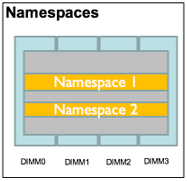

Each PMem module contains a Label Storage Area (LSA), which holds the configuration metadata that defines a namespace. Think of namespaces as a way to divide the available space within a region, much as partitions divide regular HDDs and SSDs.

Namespace

A namespace defines a contiguously addressed range of non-volatile memory conceptually similar to a hard disk partition, SCSI logical unit (LUN), or an NVM Express* namespace. The PMem storage unit appears in /dev as a device used for input/output (I/O). Namespaces are associated with App Direct Mode only. Intel recommends using the ndctl utility for creating namespaces for the Linux operating system and PowerShell cmdlets on Microsoft Windows.


DAX

Direct Access (DAX) is described in the Persistent Memory Programming Model. It allows applications to directly access persistent media using memory-mapped files residing on a DAX-aware file system. File systems that support DAX include ext4 and XFS on Linux and NTFS on Windows. With DAX, these file systems bypass the kernel page cache and I/O subsystem and avoid interrupts and context switching, allowing the application to perform byte-addressable load/store memory operations. For PMem, this is the fastest and shortest path to stored data. Eliminating the I/O stack's software overhead is essential to take advantage of persistent memory's extremely low latencies and high bandwidth capabilities, which is critical for small and random I/O operations.
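
The load/store access pattern can be sketched with an ordinary memory-mapped file. This is a minimal illustration, not a PMem-specific implementation: a temporary file stands in for a file on a DAX-mounted file system (the mount point mentioned in the comment is hypothetical), so the sketch runs on any system but does not itself bypass the page cache.

```python
import mmap
import os
import tempfile

# Assumption: on a real system this file would live on a file system mounted
# with DAX enabled (for example a hypothetical /mnt/pmem). A temporary file is
# used here so the sketch runs anywhere.
path = os.path.join(tempfile.mkdtemp(), "counter.bin")

with open(path, "wb") as f:
    f.truncate(4096)                      # size the file before mapping it

with open(path, "r+b") as f:
    with mmap.mmap(f.fileno(), 4096) as view:
        view[0:8] = (42).to_bytes(8, "little")        # a store, not a write() call
        view.flush()                                  # ask the OS to make the update durable
        value = int.from_bytes(view[0:8], "little")   # a load, not a read() call

print(value)
```

The application works on the mapped bytes directly instead of serializing data into read and write calls, which is the access model DAX makes possible on PMem.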

Namespace Types

When a host is configured in App Direct Mode, the namespace can be provisioned in one of the following modes:

- Filesystem-DAX (FSDAX) is the default mode of a namespace when specifying ndctl create-namespace with no options. The DAX capability enables workloads / working-sets that would exceed the page cache's capacity to scale up to the capacity of PMem. Workloads that fit in page cache or perform bulk data transfers may not see benefit from DAX. When in doubt, pick this mode.

- Device DAX (DEVDAX) enables similar mmap(2) DAX mapping capabilities as Filesystem-DAX. However, instead of a block device that can support a DAX-enabled file system, this mode emits a single character device file (/dev/daxX.Y). Use this mode to assign PMem to a virtual machine, register persistent memory for RDMA, or when gigantic mappings are needed.

- Sector can host legacy filesystems that do not checksum metadata or applications that are not prepared for torn sectors after a crash. The expected usage for this mode is for small boot volumes. This mode is compatible with other operating systems.

- Raw mode is effectively just a memory disk. This mode is compatible with other operating systems but does not support DAX operation.
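
The choice among the four modes can be summarized as a small lookup. This is a sketch under stated assumptions: the use-case labels are hypothetical names invented for illustration, and the function only builds an ndctl command line without running it.

```python
# Hypothetical use-case labels mapped to the namespace modes described above.
USE_CASE_TO_MODE = {
    "dax-filesystem": "fsdax",
    "virtual-machine": "devdax",
    "rdma": "devdax",
    "gigantic-mappings": "devdax",
    "legacy-filesystem": "sector",
    "boot-volume": "sector",
    "memory-disk": "raw",
}


def namespace_mode(use_case):
    """Return the namespace mode for a use case; fsdax when in doubt."""
    return USE_CASE_TO_MODE.get(use_case, "fsdax")


def create_namespace_command(use_case, region=None):
    """Build (but do not execute) an ndctl create-namespace command line."""
    command = ["ndctl", "create-namespace", "--mode", namespace_mode(use_case)]
    if region is not None:
        command += ["--region", region]   # e.g. "region0"; the name is an example
    return command


print(" ".join(create_namespace_command("rdma", region="region0")))
```

Unknown use cases fall back to fsdax, mirroring the "when in doubt" advice above.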

Which PMem Mode to Use

Intel Optane PMem is configured into one of two modes: Memory Mode or App Direct Mode. It is also possible to configure a system using a combination of the two, often referred to as Mixed Mode, where some of the capacity is used for Memory Mode and some for App Direct. In general, Memory Mode is used by unmodified applications that are constrained by main memory size and only require volatile memory. In contrast, App Direct provides non-volatile access to persistent memory that helps reduce I/O bottlenecks.

Optimal performance is gained when application developers determine which data structures belong to the memory and storage tiers: DRAM, PMem, and non-volatile storage (SSDs, HDDs). With the aid of profiling tools, developers can characterize application workloads and evaluate which mode best fits each application. Applications should be tested in both modes to fully determine what optimizations are necessary to achieve the maximum performance and benefits of PMem.

For an application to take advantage of App Direct, the application requires some code changes. Although not mandatory, we recommend using the libraries from the Persistent Memory Developer Kit (PMDK). These libraries are open source and make adoption more manageable and less error-prone.

More information about these topics:

- Article: Intel Optane Persistent Memory Operating Modes Explained

- Article: Intel Optane Persistent Memory analysis tools on the Intel® Developer Zone

- Video: Operating Modes of Intel® Optane™ DC Persistent Memory

Memory Mode

In Memory Mode, the installed Intel® Optane™ PMem modules act as main system memory, and the DRAM capacity becomes an extra level of cache. The CPU's memory controller manages the persistent memory capacity, which means that the operating system does not have direct access. Memory Mode works well in situations where the application's working set fits within the DRAM size, but more capacity is needed to avoid paging from disk.

Although the media is natively persistent, data is always volatile in Memory Mode because all the data is cryptographically erased on every system reboot.

App Direct Mode

In App Direct Mode, the PMem capacity is exposed to the operating system as an additional memory tier. The namespace type determines how the operating system exposes PMem to the application(s). For example, FSDAX is the default and recommended namespace type for most situations. The resulting block devices are then used by applications to speed up their I/O paths by either (1) replacing traditional non-volatile devices (such as SSDs or HDDs) for their I/O needs, or (2) directly persisting their data structures (avoiding the I/O stack altogether, using DAX).

Although the primary use case for App Direct Mode is data persistence, using it as an additional volatile memory pool is also possible.

Using this mode usually results in performance improvement, but not always. If I/O is done in large, sequential chunks, and the page cache effectively keeps the most used blocks in DRAM, traditional I/O can still be the best approach. Keep in mind, sometimes a large amount of DRAM is needed to make the page cache effective, and PMem can avoid that extra cost by not using a page cache at all. In general, PMem is better for small, random I/O. Applications can benefit from this by re-designing their I/O paths. Applications commonly use serialization, buffering, and large sequential I/O, which typically generates additional processing and I/O. Applications that use memory-mapped files on a DAX file system can directly persist their data structures in memory at byte granularity, significantly reducing or entirely removing the I/O.

Mixed Mode

When PMem is configured with only a portion of the capacity assigned to Memory Mode (<100%), the remaining capacity is available to be used in App Direct Mode.

Note: When part or all of the PMem module capacity is allocated to Memory Mode, all the DRAM capacity becomes the last-level cache and is not available to the operating system or applications.
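
The split can be estimated with simple arithmetic. The sketch below is an approximation under stated assumptions: it ignores the alignment rounding and metadata reservations that the platform applies, and the 1512 GiB total is just an example figure. The helper names are hypothetical; the goal string uses the MemoryMode and PersistentMemoryType properties of ipmctl create -goal.

```python
def split_capacity(total_pmem_gib, memory_mode_percent):
    """Approximate (Memory Mode GiB, App Direct GiB), ignoring alignment and metadata."""
    if not 0 <= memory_mode_percent <= 100:
        raise ValueError("memory_mode_percent must be between 0 and 100")
    memory_gib = total_pmem_gib * memory_mode_percent / 100
    return memory_gib, total_pmem_gib - memory_gib


def mixed_mode_goal(memory_mode_percent):
    """Build the ipmctl goal command for a Mixed Mode split (not executed here)."""
    return ("ipmctl create -goal MemoryMode=%d PersistentMemoryType=AppDirect"
            % memory_mode_percent)


memory_gib, app_direct_gib = split_capacity(1512.0, 25)
print(memory_gib, app_direct_gib)
print(mixed_mode_goal(25))
```

Because DRAM becomes a cache as soon as any capacity is in Memory Mode, the volatile memory visible to the operating system in this example is the Memory Mode portion only.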

Hardware Requirements

The following hardware components are required:

- Main system board supporting 2nd generation Intel® Xeon® Scalable processors

- Intel® Optane™ persistent memory modules

- DRAM

To make purchases of Intel Optane Persistent memory, please contact your server OEM or supplier. You can learn more and request information on buying servers with PMem and other Intel technologies on the Intel Optane Persistent Memory website.

PMem Provisioning Overview

Provisioning PMem is a one- or two-step process depending on the chosen mode: Memory Mode or App Direct.

- Configuring the goal - During this process, a goal is specified, stored on the PMem modules, and applied on the next reboot. A goal configures the modules in Memory Mode, App Direct Mode, or Mixed Mode. The goal is set in one of two ways:

  - BIOS provisioning - PMem modules can be provisioned using options provided in the BIOS. Refer to the documentation provided by your system vendor.

  - Operating system utility - ipmctl is available for the UEFI shell and as a command-line utility for both Linux and Windows. It can be used to configure the PMem in the platform.

- Configuring namespaces - Only App Direct Mode needs namespaces configured, and this is an OS-specific operation done with the following tools. Detailed instructions are provided in part 2 and part 3 of this guide.

  - Linux uses the ndctl utility

  - Windows uses PowerShell
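
The one- or two-step flow above can be sketched as an ordered checklist. This is an illustrative outline only: the function name and the mode labels are hypothetical, the commands are returned as strings rather than executed, and the namespace step is left generic because parts 2 and 3 cover it in detail.

```python
def provisioning_steps(mode, os_name="linux"):
    """Return the ordered provisioning steps for 'memory' or 'appdirect' (labels are hypothetical)."""
    if mode == "memory":
        goal = "ipmctl create -goal MemoryMode=100"
    elif mode == "appdirect":
        goal = "ipmctl create -goal PersistentMemoryType=AppDirect"
    else:
        raise ValueError("mode must be 'memory' or 'appdirect'")

    steps = [goal, "reboot so the goal is applied"]
    if mode == "appdirect":
        # Step two exists only for App Direct Mode and is OS-specific.
        if os_name == "linux":
            steps.append("create namespaces with ndctl (see part 2)")
        else:
            steps.append("create PMem disks with PowerShell (see part 3)")
    return steps


for step in provisioning_steps("appdirect"):
    print(step)
```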

Software - ipmctl

ipmctl is necessary to discover PMem module resources, create goals and regions, update the firmware, and debug issues affecting PMem modules.

Note: Although the same ipmctl commands are used in both the UEFI and the operating system versions of the utility, not all functionality is available at the operating system level. For example, the secure erasure features are not implemented.

The full list of commands is available by running ipmctl help or man ipmctl from the command line. You are required to have administrative privileges to run ipmctl.


Using ipmctl within Unified Extensible Firmware Interface (UEFI)

ipmctl can be launched from a UEFI shell for management at the UEFI level. This Quick Start Guide does not cover this option.

Using ipmctl within an Operating System Environment

You can download ipmctl from its GitHub project page, where you will find the sources and the Windows binary. Linux binaries are available through the package manager of most Linux distributions.

All snippets and screenshots below are from a Linux system; however, the commands are the same between Windows and Linux.

Next Steps

This ends the OS-agnostic portion of the QSG. The next two parts are the tutorials:

- Quick Start Guide Part 2: Provisioning for Intel® Optane™ Persistent Memory on Linux

- Quick Start Guide Part 3: Provisioning for Intel® Optane™ Persistent Memory on Microsoft Windows

Appendixes

Appendix A: Frequently Asked Questions on Provisioning

When I configure in App Direct Mode, why do I see part of the capacity as "Inaccessible" and "Unconfigured"?

PMem reserves a certain amount of capacity for metadata. For first-generation Intel Optane PMem, it is approximately 0.5% per DIMM. Unconfigured capacity is the capacity that is not mapped into the system's physical address space. It could also be the capacity that is inaccessible because the processor does not support the entire capacity on the platform.

    MemoryType   | DDR         | PMemModule   | Total
    ==========================================================
    Volatile     | 189.500 GiB | 0.000 GiB    | 189.500 GiB
    AppDirect    | -           | 1512.000 GiB | 1512.000 GiB
    Cache        | 0.000 GiB   | -            | 0.000 GiB
    Inaccessible | 2.500 GiB   | 5.066 GiB    | 7.566 GiB
    Physical     | 192.000 GiB | 1517.066 GiB | 1709.066 GiB
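
The accounting in this output can be checked with a short script. This is a sketch that parses the sample table shown above, pasted in as a string; the column layout is taken from that sample and may differ between ipmctl versions.

```python
SAMPLE = """\
MemoryType   | DDR         | PMemModule   | Total
==========================================================
Volatile     | 189.500 GiB | 0.000 GiB    | 189.500 GiB
AppDirect    | -           | 1512.000 GiB | 1512.000 GiB
Cache        | 0.000 GiB   | -            | 0.000 GiB
Inaccessible | 2.500 GiB   | 5.066 GiB    | 7.566 GiB
Physical     | 192.000 GiB | 1517.066 GiB | 1709.066 GiB
"""


def gib(cell):
    """Convert a cell such as '5.066 GiB' to a float; '-' counts as zero."""
    cell = cell.strip()
    return 0.0 if cell == "-" else float(cell.split()[0])


def parse(text):
    rows = {}
    for line in text.splitlines():
        if "|" not in line or line.startswith("MemoryType"):
            continue                      # skip the header and the separator line
        name, ddr, pmem, total = (c.strip() for c in line.split("|"))
        rows[name] = {"DDR": gib(ddr), "PMemModule": gib(pmem), "Total": gib(total)}
    return rows


rows = parse(SAMPLE)
# Share of the PMem module capacity reported as inaccessible.
pmem_inaccessible_pct = 100 * rows["Inaccessible"]["PMemModule"] / rows["Physical"]["PMemModule"]
# The four usage rows should add up to the physical total.
accounted = sum(rows[k]["Total"] for k in ("Volatile", "AppDirect", "Cache", "Inaccessible"))
print(round(pmem_inaccessible_pct, 2), round(accounted, 3))
```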

How do I find the firmware version on the modules?

# ipmctl show -dimm

My processor does not allow me to configure the entire PMem module capacity on the platform. How do I find out the maximum supported capacity on the processor?

Run the following command to display, for each socket, the maximum amount of memory that can be mapped into the system physical address space. The limit depends on the processor SKU.

# ipmctl show -d TotalMappedMemory -socket

---SocketID=0x0000---

TotalMappedMemory=3203.0 GiB

---SocketID=0x0001---

TotalMappedMemory=3202.5 GiB

How do I unlock encrypted modules?

The ability to enable security, unlock a PMem region, and change the passphrase is available with the Linux kernel 5.0 driver using the ndctl command.

Does PMem support SMART errors?

Yes

How do I find a module's lifetime?

The remaining PMem module life as a percentage value of factory-expected life span can be found by running the following command:

# ipmctl show -o text -sensor percentageremaining -dimm

    DimmID | Type                | CurrentValue | CurrentState
    ============================================================
    0x0001 | PercentageRemaining | 100%         | Normal
    0x0011 | PercentageRemaining | 100%         | Normal
    0x0021 | PercentageRemaining | 100%         | Normal
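
Output like this lends itself to simple monitoring. The sketch below parses the sample shown above and flags modules that fall under a threshold; the 20% threshold is a hypothetical value chosen for illustration, not an Intel recommendation.

```python
SENSOR_OUTPUT = """\
DimmID | Type | CurrentValue | CurrentState
============================================================
0x0001 | PercentageRemaining | 100% | Normal
0x0011 | PercentageRemaining | 100% | Normal
0x0021 | PercentageRemaining | 100% | Normal
"""

WARN_BELOW = 20   # hypothetical alert threshold, in percent


def modules_needing_attention(text, warn_below=WARN_BELOW):
    """Return the DimmIDs whose remaining life is low or whose state is not Normal."""
    flagged = []
    for line in text.splitlines():
        cells = [c.strip() for c in line.split("|")]
        if len(cells) != 4 or cells[1] != "PercentageRemaining":
            continue                      # skip header, separator, and other sensors
        remaining = int(cells[2].rstrip("%"))
        if remaining < warn_below or cells[3] != "Normal":
            flagged.append(cells[0])
    return flagged


print(modules_needing_attention(SENSOR_OUTPUT))
```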

When configured in Mixed mode, is the DRAM capacity seen by the operating system and applications?

No. When a portion or all of the capacity is configured in Memory mode, DRAM is not available to the operating system. The capacity configured in Memory Mode is the only capacity used as the main memory.

I am adding two new DIMMs and would like to erase all the existing configurations and safely reconfigure the hardware but could not delete regions.

To create a new configuration, destroy any existing namespaces, delete the existing goal, and then create a new goal, as shown below. There is no need to delete regions. The force option (-f) will overwrite the current goal, and on reboot, you will see the new goal.

# ndctl destroy-namespace namespace0.0

or

# ndctl destroy-namespace -f all

# ipmctl delete -goal

Delete memory allocation goal from DIMM 0x0001: Success

Delete memory allocation goal from DIMM 0x0011: Success

Delete memory allocation goal from DIMM 0x0101: Success

Delete memory allocation goal from DIMM 0x0111: Success

# ipmctl create -f -goal persistentmemorytype=appdirect

Why do I see a certain amount of capacity shown as reserved?

Reserved capacity is the total PMem capacity that is reserved. This capacity is the PMem partition capacity (rounded down for alignment) less any App Direct capacity. Reserved capacity typically results from a Memory Allocation Goal request that specified the Reserved property. This capacity is not mapped to the system physical address (SPA) space.

What is the list of supported configurations, and what happens if I am not using one of these configurations?

A list of validated and popular configurations is provided in the System Requirements section of this document. Other configurations are likely not to be supported by the BIOS and may not boot.

Appendix B: List of ipmctl Commands

Memory Provisioning

Health and Performance

Security

Device Discovery

Support and Maintenance

Debugging

Appendix C: Configuration - Management

This appendix covers in more detail the management commands that might be required during a reconfiguration.

Change Configuration Goal

When creating a goal, ipmctl checks whether the NVDIMMs are already configured. If so, it prints a message that the current configuration must be deleted before creating a new goal. The current configuration can be deleted by first disabling and destroying namespaces and then disabling the active regions.

# ipmctl create -goal MemoryMode=100

Create region configuration goal failed: Error (115) - A requested DIMM already has a configured goal. Delete this existing goal before creating a new one

Delete Configuration

If you already have a goal configured, you will need to delete it before applying a new goal in its place. To disable/destroy a namespace, you will need to use ndctl or PowerShell to do the management, which is covered in parts 2 and 3 of this Quick Start Guide.

# ipmctl delete -goal

Delete allocation goal from DIMM 0x0001: Success

Delete allocation goal from DIMM 0x0011: Success

Delete allocation goal from DIMM 0x0021: Success

...

Delete allocation goal from DIMM 0x1101: Success

Delete allocation goal from DIMM 0x1111: Success

Delete allocation goal from DIMM 0x1211: Success

Dump Current Goal

# ipmctl dump -destination testfile -system -config

Successfully dumped system configuration to file: testfile

Create a Goal from a Configuration File

Goals can be provisioned using a configuration file with the load -source <file> -goal command. To save the current configuration to a file, use the dump -destination <file> -system -config command. This allows the same configuration to be applied to multiple systems or restored to the same system.

# ipmctl dump -destination testfile -system -config

# ipmctl load -source myPath/testfile -goal

Show Current Goal

To see the current goal, if there is one, use the show -goal command.

# ipmctl show -goal

Confirm Mode Change

So far, we have seen how the goal can be set for different modes. Upon reboot, run the following command to see whether the mode was applied correctly. The output below shows that the goal was set to configure the full capacity in Memory Mode.

# ipmctl show -memoryresources

Capacity=6031.2 GiB

MemoryCapacity=6024.0 GiB

AppDirectCapacity=0.0 GiB

UnconfiguredCapacity=0.0 GiB

InaccessibleCapacity=7.2 GiB

ReservedCapacity=0.0 GiB
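
The check can also be scripted. The following sketch parses the key=value output shown above (pasted in as a string) and reports which mode the capacities imply; the output format is taken from this sample and may differ between ipmctl versions, and the function names are hypothetical.

```python
OUTPUT = """\
Capacity=6031.2 GiB
MemoryCapacity=6024.0 GiB
AppDirectCapacity=0.0 GiB
UnconfiguredCapacity=0.0 GiB
InaccessibleCapacity=7.2 GiB
ReservedCapacity=0.0 GiB
"""


def parse_resources(text):
    """Turn 'Key=1.0 GiB' lines into a {key: GiB as float} dictionary."""
    values = {}
    for line in text.splitlines():
        key, sep, rest = line.partition("=")
        if sep:
            values[key.strip()] = float(rest.split()[0])
    return values


def active_mode(values):
    """Infer the applied mode from the Memory Mode and App Direct capacities."""
    memory = values["MemoryCapacity"] > 0
    app_direct = values["AppDirectCapacity"] > 0
    if memory and app_direct:
        return "Mixed Mode"
    if memory:
        return "Memory Mode"
    if app_direct:
        return "App Direct Mode"
    return "unconfigured"


resources = parse_resources(OUTPUT)
print(active_mode(resources))
```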

Show Regions

If the mode is changed from Memory Mode to App Direct Mode, a single region per socket is created upon reboot. If the mode is changed from App Direct Mode to Memory Mode, no regions are created. Use the following command to see the regions created:

# ipmctl show -region

    SocketID | ISetID             | PersistentMemoryType | Capacity   | FreeCapacity | HealthState
    ================================================================================================
    0x0000   | 0xa0927f48a8112ccc | AppDirect            | 3012.0 GiB | 3012.0 GiB   | Healthy
    0x0001   | 0xf6a27f48de212ccc | AppDirect            | 3012.0 GiB | 3012.0 GiB   | Healthy

Appendix D: Sector Mode PMem Disk (storage over App Direct Mode)

For old file systems or applications that require write sector atomicity, create a PMem Disk in sector mode.

PMem-based storage can perform I/O at byte, or more accurately, cache line granularity. However, exposing such storage as a traditional block device does not guarantee atomicity and requires the block drivers for PMem to provide this support.

Traditional SSDs typically protect against torn sectors in hardware by completing in-flight block writes. In contrast, if a power failure happens while a PMem write is in progress, the block may contain a mix of old and new data. Existing applications may not be prepared to handle such a scenario since they are unlikely to protect against torn sectors or metadata.

The Block Translation Table (BTT) provides the atomic sector update semantics for PMem devices so that applications that rely on sector writes not being torn can continue to do so.

As shown in the diagram below, the BTT manifests itself as a stacked block device and reserves a portion of the underlying storage for its metadata. At the heart of it is an indirection table that re-maps all of the blocks on the volume. It can be viewed as a very simple file system that only provides atomic sector updates. The DAX feature is not supported in this mode.
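
The idea behind the indirection table can be illustrated with a toy in-memory model. This is a conceptual sketch only, not the actual BTT on-media format: it assumes a single spare block and a simulated crash flag, and omits the metadata a real implementation needs for recovery.

```python
class ToyBTT:
    """Toy model: logical sectors map to physical blocks through an indirection table."""

    def __init__(self, sectors, sector_size=512):
        self.sector_size = sector_size
        # One extra physical block serves as the spare that receives new writes.
        self.blocks = [bytes(sector_size) for _ in range(sectors + 1)]
        self.map = list(range(sectors))   # logical sector -> physical block
        self.free = sectors               # index of the spare physical block

    def read(self, lba):
        return self.blocks[self.map[lba]]

    def write(self, lba, data, crash_before_commit=False):
        if len(data) != self.sector_size:
            raise ValueError("data must be exactly one sector")
        self.blocks[self.free] = bytes(data)   # 1. fill the spare; old data is untouched
        if crash_before_commit:
            return                              # simulated power loss: map still points at old data
        # 2. A single map update commits the write; the old block becomes the spare.
        self.map[lba], self.free = self.free, self.map[lba]


btt = ToyBTT(sectors=4)
btt.write(2, b"A" * 512)
btt.write(2, b"B" * 512, crash_before_commit=True)   # interrupted write
print(btt.read(2)[:1])
```

Because the new data never overwrites the block that the map currently points to, a reader sees either the complete old sector or the complete new one, never a mix.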