Jump-start AI Development

Our extensive library of sample code and pretrained models provides a foundation that enables you to develop robust AI applications quickly and efficiently.

Build Faster

Use optimized software stacks and open source tools alongside our high-performance CPUs, GPUs, and NPUs. Together, they provide seamless integration, performance tuning, and faster training and inference for your AI workloads.

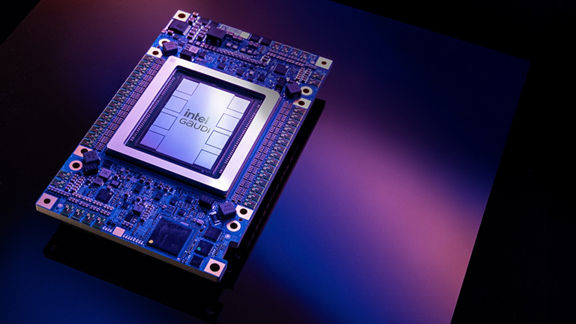

Optimize Your Path to Production

Develop and optimize AI models and applications for enterprise solutions, running training and inference workloads of any scale with top-tier price performance. Test the new Intel® Gaudi® 3 AI accelerator to push the limits of large-model training in the cloud.

Deploy Powerful Apps Anywhere

Get the most out of your AI projects with Intel's AI PC. Discover tools, workflows, frameworks, and developer kits that include the latest Intel® hardware featuring the Intel® Core™ Ultra processor.

Connect and Collaborate

Get Early Access

Be among the first to take advantage of exclusive features and content, including Intel’s cutting-edge development tools, libraries, and software frameworks. Get notified of releases of guides, tutorials, and technical documentation.

Intel Innovation 2024

Engage with top AI industry leaders, technologists, and pioneering startup entrepreneurs. Watch the on-demand Innovation Selects.

Get Help

Find assistance and get advice from Intel engineers, AI experts, and our vibrant developer community.