Overview

The Simple Autonomous Wheeled Robot (SAWR) project defines the hardware and software required for a basic "example" robot capable of autonomous navigation using the Robot Operating System* (ROS*) and an Intel® RealSense™ camera. In this article, we give an overview of the SAWR project and also offer some tips for building your own robot using the Intel RealSense camera and SAWR projects.

Mobile Robots – What They Need

Mobile robots require the following capabilities:

- Sense a potentially dynamic environment. The environment surrounding robots is not static. Obstacles, such as furniture, humans, or pets, are sometimes moving, and can appear or disappear.

- Determine current location. For example, imagine that you are driving a car. You need to answer the question "Where am I?" on the map, or at least know your position relative to your destination.

- Navigate from one location to another. For example, to drive your car to your destination, you need both driver (deciding on how much power to apply and how to steer) and navigator (keeping track of the map and planning a route to the destination) skills.

- Interact with humans as needed. Robots in human environments need to be able to interact appropriately with humans. This may mean the ability to recognize an object as a human, follow him or her, and respond to voice or gesture commands.

The SAWR project, based on ROS and the Intel RealSense camera, covers the first three of these requirements. It can also serve as a platform to explore how to satisfy the last requirement: human interaction.

A Typical Robot Software Stack

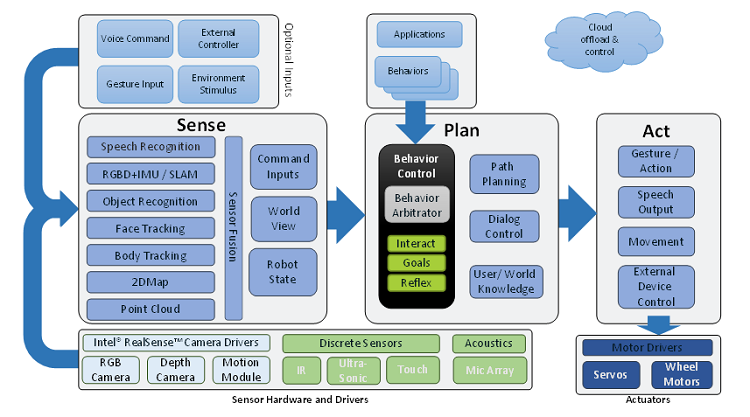

To fulfill the above requirements, a typical robot software stack consists of many modules (see Figure 1). At the bottom of the stack, sensor hardware drivers, including those for the Intel RealSense camera in the case of the SAWR, deliver environmental information to a set of sensing modules. These modules recognize environmental information as well as human interaction. Several sources of information are fused to create various models: a world model, an estimate of the robot state (including position in the world), and command inputs (for example, voice recognition).

The Plan module decides how the robot will act in order to achieve a goal. For mobile robotics, the main purpose is navigating from one place to another, for which it is necessary to calculate obstacle-free paths given the current world model and state.
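As a rough illustration of what calculating an obstacle-free path can involve, the following sketch runs a breadth-first search over a small 2D occupancy grid. The grid contents, the `plan_path` name, and the 4-connected motion model are assumptions made for this example. The SAWR stack relies on the ROS navigation packages for planning rather than on code like this.

```python
from collections import deque

def plan_path(grid, start, goal):
    """Breadth-first search on a 4-connected grid.

    grid: list of rows, where 0 is a free cell and 1 is an obstacle.
    start, goal: (row, col) tuples.
    Returns the shortest list of cells from start to goal, or None.
    """
    rows, cols = len(grid), len(grid[0])
    came_from = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk the parent links back to the start, then reverse.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in came_from:
                came_from[(nr, nc)] = cell
                queue.append((nr, nc))
    return None  # no obstacle-free route exists

# Hypothetical world model: a wall with a single gap on the right.
world = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 0],
]
path = plan_path(world, (0, 0), (2, 0))
print(path)

# If the gap closes (an unexpected obstacle), replanning reports failure.
blocked = [row[:] for row in world]
blocked[1][3] = 1
no_path = plan_path(blocked, (0, 0), (2, 0))
print(no_path)
```

Calling the planner again on an updated grid is a simple stand-in for the replanning behavior described in this section.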

Based on the calculated plan, the Act module manages the actual movement of the robot. Typically, motor control is the main function of this segment, but other actions are possible, such as speech output. When carrying out an action, a robot may also be continuously updating its world model and replanning. For example, if an unexpected obstacle arises, the robot may have to update its model of the world and also replan its path. The robot may even make mistakes (for example, its estimate of its position in the world might be incorrect), in which case it has to figure out how to recover.

Autonomous navigation requires a lot of computation to do the above tasks. Some tasks can be offloaded to the cloud, but due to connectivity and latency issues this is frequently not an option. The SAWR robot can do autonomous navigation using only onboard computational resources, but the cloud can still be useful for adding other capabilities, such as voice control (for example, using Amazon Alexa Voice Service*).

Figure 1. A typical robot software stack.

Navigation Capabilities - SLAM

Simultaneous localization and mapping (SLAM) is one of the most vital capabilities for autonomous mobile robots. In a typical implementation, the robot navigates (plans paths) through a space using an occupancy map. This map needs to be dynamically updated as the environment changes. In lower-end systems, this map is typically 2D, but more advanced systems might use a 3D representation such as a point cloud. This map is part of the robot’s world representation. The “localization” part of SLAM means that in addition to maintaining the map, the robot needs to estimate where it is located in the map. Normally this estimation uses a probabilistic method; rather than a single estimated location, the robot maintains a probability distribution and the most probable location is used for planning. This allows the robot to recover from errors and reason about uncertainty. For example, if the estimate for the current location is too uncertain, the robot could choose to acquire more information from the environment (for example, by rotating to scan for landmarks) to refine its estimate.
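To make the idea of maintaining a probability distribution over locations more concrete, here is a minimal histogram-filter sketch for a robot in a one-dimensional, cyclic corridor. The corridor layout, the sensor probabilities (`p_hit`, `p_miss`), and the assumption of exact motion are all invented for this example. This is a textbook technique, not the algorithm used by the SAWR stack.

```python
def normalize(belief):
    total = sum(belief)
    return [p / total for p in belief]

def sense(belief, world, measurement, p_hit=0.8, p_miss=0.2):
    """Measurement update: weight each cell by how well it explains the reading."""
    weighted = [p * (p_hit if cell == measurement else p_miss)
                for p, cell in zip(belief, world)]
    return normalize(weighted)

def move(belief, step):
    """Motion update for an exact, cyclic shift of `step` cells."""
    n = len(belief)
    return [belief[(i - step) % n] for i in range(n)]

# Hypothetical corridor: what the sensor would see in each cell.
world = ["door", "wall", "wall", "door", "wall"]
belief = [1.0 / len(world)] * len(world)   # start fully uncertain

belief = sense(belief, world, "door")
belief = move(belief, 1)
belief = sense(belief, world, "wall")
belief = move(belief, 1)
belief = sense(belief, world, "wall")

# The most probable cell is the one a planner would use.
best = max(range(len(belief)), key=lambda i: belief[i])
print(best, [round(p, 3) for p in belief])
```

After seeing a door and then two walls, the belief concentrates on cell 2. The remaining probability mass on other cells is what lets the robot recover if that estimate later turns out to be wrong.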

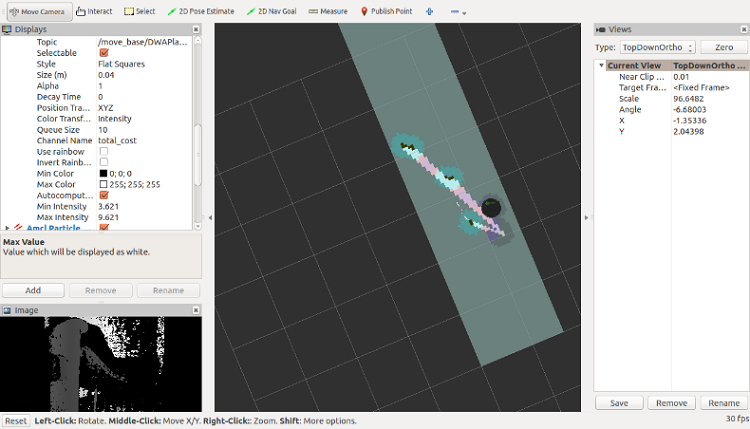

In the default SAWR software stack, the open source slam_gmapping package is used to create and manage the map, although there are several other options available, such as cartographer and rgbd-slam. This module is continually integrating new sensor data into the map and clearing out old data if it is proven incorrect. Another module, amcl, is used to estimate the current location by matching sensor data against the map. These modules run in parallel to constantly update the map and the estimate of the robot’s position. Figure 2 shows a typical indoor environment and a 2D map created by this process.

Figure 2. Simultaneous localization and mapping (SLAM) with 2D mapping.
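One common way to integrate new sensor data into an occupancy map and clear out stale data is a log-odds update per cell. The sketch below shows that idea for a single cell. The sensor-model probabilities (0.7 and 0.3) and the function names are assumptions for this example, and the code is not taken from slam_gmapping, which has its own internal map representation.

```python
import math

# Assumed inverse sensor model: how strongly one observation shifts belief.
L_OCC = math.log(0.7 / 0.3)    # evidence added when the cell is seen occupied
L_FREE = math.log(0.3 / 0.7)   # evidence added when the cell is seen free

def update_cell(log_odds, observed_occupied):
    """Fold one observation into the cell's log-odds value."""
    return log_odds + (L_OCC if observed_occupied else L_FREE)

def probability(log_odds):
    """Convert log-odds back to an occupancy probability."""
    return 1.0 - 1.0 / (1.0 + math.exp(log_odds))

cell = 0.0  # log-odds of 0 corresponds to p = 0.5 (unknown)

for _ in range(3):
    cell = update_cell(cell, True)     # an obstacle is observed three times
p_after_seen = probability(cell)

for _ in range(5):
    cell = update_cell(cell, False)    # the obstacle moved; the cell now reads free
p_after_cleared = probability(cell)

print(round(p_after_seen, 3), round(p_after_cleared, 3))
```

Because evidence accumulates additively, a few contradicting observations are enough to overturn an earlier belief, which is how a moved chair eventually disappears from the map.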

Hardware for Robotics

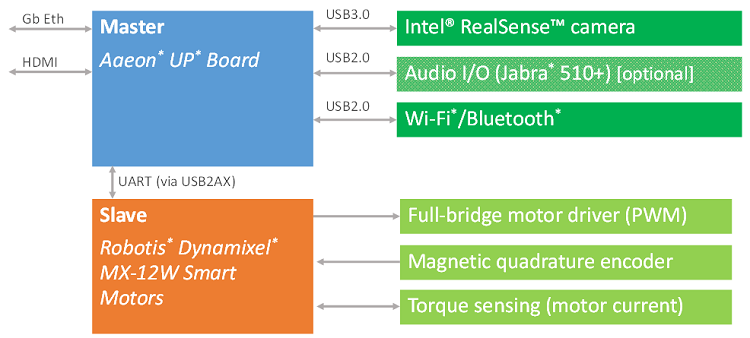

Figure 3 shows the hardware architecture of the SAWR project. Like many robotics systems, the architecture consists of a master and slave system. The master takes care of high-level processing (such as SLAM and planning), and the slave takes care of real-time processing (such as motor speed control). This is similar to how the brain and spinal reflexes work together in animals. Several different options can be used for this model, but typically a Linux* system is used for the master and one or more microcontroller units (MCUs) are used for the slave.

Figure 3. Robot architecture.

In this article, Intel RealSense cameras are used as the primary environmental sensor. These cameras provide depth data and can be used as input to a SLAM system. The Intel® RealSense™ camera R200 or Intel® RealSense™ camera ZR300 is used in the current SAWR project. The Intel® RealSense™ camera D400 series, shown in Figure 4, will soon become a common depth camera of choice. Because it provides similar data with improved range and accuracy and uses the same driver, an upgrade is straightforward. As for drivers, librealsense and realsense_ros_camera are available on GitHub*. You can use any Intel RealSense camera with them.

Figure 4. Intel® RealSense™ Depth Camera D400 Series.

SAWR Basic Mobile Robot

The following is a spec overview of the SAWR basic mobile robot, shown in Figure 6, which is meant to be an inexpensive reference design that is easy to reproduce (the GitHub site includes the files to laser-cut your own frame). The SAWR software stack can be easily adapted to other robot frames. For this design, the slave computers are actually embedded inside the Dynamixel servos. The MCUs in these smart motors take care of low-level issues like position sensing and speed control, making the rest of the robot much simpler.

Computer: Aaeon UP board

Camera: Intel RealSense camera

Actuation: Two Dynamixel MX-12W* smart servos with magnetic encoders

Software: Xubuntu* 16.04 and ROS Kinetic*

Frame: Laser-cut acrylic or POM, Pololu sphere casters, O-ring tires and belt transmission

Other: DFRobot 25W/5V power regulator

Extras: Jabra Speak* 510+ USB speakerphone (for voice I/O, if desired)

Instructions and software: https://github.com/01org/sawr

Figure 6. SAWR basic mobile robot.

One of the distinctive aspects of the SAWR project is that both the hardware and the software have been developed in an open source style. The software is based on modifying and simplifying the Open Source Robotics Foundation Turtlebot* stack, but adds a custom motor driver using the Dynamixel Linux* SDK. For the hardware, the frame is parametrically modeled using OpenSCAD*, and then converted to laser-cut files using Inkscape*. You can download all the data from GitHub, and then make your own frame using a laser cutter (or a laser-cutter service). Most of the other parts are available from a hardware store. Detailed instructions, assembly, and setup plans are available online.

Using an OEM Board for Robotics

When you choose an OEM board for robotics, such as the UP board used for SAWR, active cooling is strongly recommended to get higher performance. Robotics middleware usually consumes a lot of CPU resources, and a shortage of CPU resources can translate into lower-quality or slower autonomous movement. With active cooling, you can sustain the CPU’s highest speed indefinitely. In particular, the UP board can turbo with active cooling and run at a much higher clock rate than it can without.

You may be concerned about power resources for active cooling and higher clock rates. However, power consumption is not usually a limiting factor in robotics, because motors are usually the primary power load. In fact, instead of the basic UP board, you can select the UP Squared*, which has much better performance.

Another issue is memory. The absolute minimum is 2 GB, but 4 GB is highly recommended. The SLAM system uses a lot of memory to maintain the world state and position estimate. Remember that the OS needs memory too, and Ubuntu tends to use about 500 MB doing nothing. So a 4 GB system leaves about 7x as much space for applications as a 1 GB system (3.5 GB versus 0.5 GB), not just 4x.
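The memory comparison can be checked with a few lines of arithmetic. The 0.5 GB idle footprint is the approximate figure quoted above, and `available_gb` is a helper name made up for this sketch.

```python
OS_OVERHEAD_GB = 0.5  # approximate idle OS footprint quoted in the text

def available_gb(total_gb):
    """Memory left for applications after the idle OS footprint."""
    return total_gb - OS_OVERHEAD_GB

ratio_4_vs_1 = available_gb(4) / available_gb(1)
ratio_4_vs_2 = available_gb(4) / available_gb(2)
print(ratio_4_vs_1, round(ratio_4_vs_2, 2))
```

The same calculation shows that 4 GB leaves a little more than twice the application space of the 2 GB minimum.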

ROS Overview

Despite its name, ROS is not an OS, but a middleware software stack that can run on top of various operating systems, although it is primarily used with Ubuntu. ROS supports a distributed, concurrent processing model based on a graph of communicating nodes. Thanks to this basic architecture, you can not only easily network together multiple processing boards on the same robot if you need to, but you can also physically locate boards away from the actual robot by using Wi-Fi* (with some loss of performance and reliability, however). From a knowledge base perspective, ROS has a large community with many existing open source nodes supporting a wide range of sensors, actuators, and algorithms. That and its excellent documentation are good reasons to choose ROS. From a development and debugging perspective, various powerful and attractive visualization tools and simulators are also available and useful.

Basic ROS Concepts

This section covers the primary characteristics of the ROS architecture. To learn more, refer to the ROS documentation and tutorials.

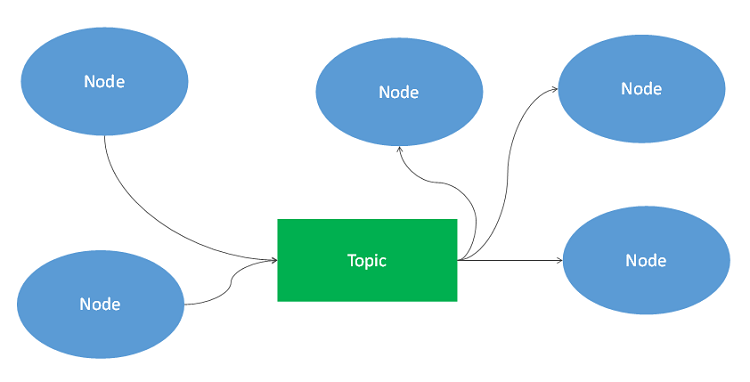

- Messages and topics (see Figure 7). ROS uses a publish and subscribe system for sending and receiving data on uniquely named topics. Each topic can have multiple publishers and subscribers. Messages are typed and can carry multiple elements. Message delivery is asynchronous, and it's usually recommended to use this for most interprocess communication in ROS.

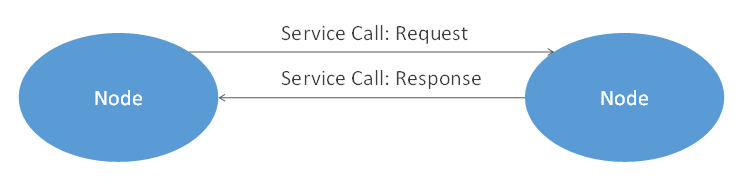

Figure 7. Messages and topics.

- Service calls (see Figure 8). Service calls use synchronous remote procedure call semantics, also known as “request/response.” The caller blocks until a response is received. Because this behavior can lead to problems such as deadlocks and hung processes, consider whether you really need service calls before building your communication around them. They are primarily used for updating parameters, for cases where message buffering creates too much overhead (for example, updating maps), or where synchronization between activities is actually needed.

Figure 8. Service calls.

- Actions (see Figure 9). Actions are used to define long-running tasks with goals, the possibility of failure, and where periodic status reports are useful. In the SAWR software stack actions are mainly used for setting the destination goal and monitoring the progress of navigation tasks. Actions generally support asynchronous goal-directed behavior control based on a standard set of topics. In the case of SAWR, you can trigger a navigation action by using Rviz (the visualizer) and the 2D Nav Goal button.

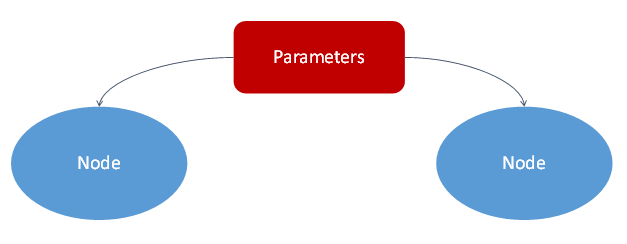

Figure 9. Actions.

- Parameters (see Figure 10). Parameters are used to set various values for each node. A parameter server provides typed constant data at startup, and the latest version of ROS also supports dynamic parameter update after node launch. Parameters can be specified in various ways, including through the command line, parameter files, or launch file parameters.

Figure 10. Parameters.

- Other ROS concepts. There are several other important concepts relevant to the ROS architecture.

  - Packages: Collections of files used to implement or specify a service or node in ROS, typically built together using the catkin build system.

  - Unified Robot Description Format (URDF): XML files describing joints and the transformations between them in a 3D model of the robot.

  - Launch files: XML files describing a set of nodes and parameters for a ROS graph.

  - YAML (YAML Ain't Markup Language): Used for parameter specification on the command line and in files.
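The difference between asynchronous topics and synchronous service calls can be illustrated with a toy in-process model. The `ToyBus` class below is a stand-in written in plain Python for this article. It is not the ROS client API, and the topic and service names (`/scan`, `/get_map_size`) are hypothetical.

```python
from collections import defaultdict

class ToyBus:
    """In-process stand-in for ROS topics and services (not the ROS API)."""

    def __init__(self):
        self._subscribers = defaultdict(list)
        self._services = {}
        self._pending = []

    def subscribe(self, topic, callback):
        self._subscribers[topic].append(callback)

    def publish(self, topic, message):
        # Asynchronous: the publisher only queues the message and returns.
        self._pending.append((topic, message))

    def spin_once(self):
        # Deliver every queued message to each subscriber of its topic.
        pending, self._pending = self._pending, []
        for topic, message in pending:
            for callback in self._subscribers[topic]:
                callback(message)

    def advertise_service(self, name, handler):
        self._services[name] = handler

    def call_service(self, name, request):
        # Synchronous: the caller waits for the handler's return value.
        return self._services[name](request)

bus = ToyBus()
received = []
# Two independent subscribers on the same topic.
bus.subscribe("/scan", lambda msg: received.append(("mapper", msg)))
bus.subscribe("/scan", lambda msg: received.append(("localizer", msg)))

bus.publish("/scan", {"ranges": [1.2, 1.3]})
before_spin = list(received)   # publish returned, but nothing was delivered yet
bus.spin_once()

bus.advertise_service(
    "/get_map_size", lambda req: {"cells": req["width"] * req["height"]})
reply = bus.call_service("/get_map_size", {"width": 4, "height": 3})
print(before_spin, received, reply)
```

The publisher never learns who received the message or when, while the service caller cannot continue until the handler returns. That is why a slow or missing service handler can hang the caller.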

ROS Tools

A lot of powerful development and debug tools are available for ROS. The following tools are typically used for autonomous mobile robots.

- Rviz (see Figure 11). Visualize various forms of dynamic 3D data in context: transforms, maps, point clouds, images, goal positions, and so on.

Figure 11. Rviz.

- Gazebo. Robot simulator, including collisions, inertia, perceptual errors, and so on.

- Rqt. Visualize graphs of nodes and topics.

- Command-line tools. Listen to and publish on topics, make service calls, initiate actions. Can filter and monitor error messages.

- Catkin. Build system and package management.

ROS Common Modules for Autonomous Movement

The following modules are commonly used for autonomous mobile robots, and SAWR adopts them as well.

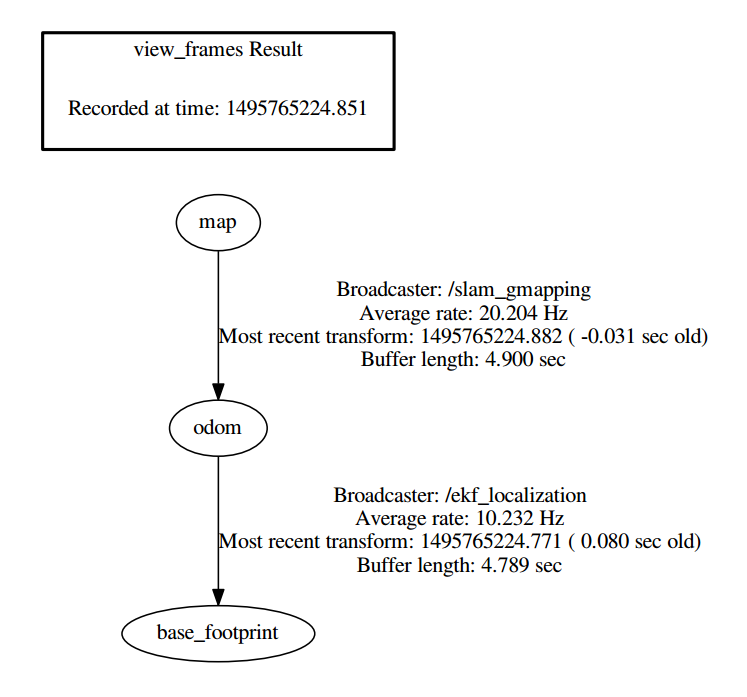

- Tf (tf2) (see Figure 12). Coordinate transform library. It's one of the most important packages for ROS. Thanks to tf, you can manage all coordinate values, including the position of the robot or the relationship between the camera and the wheels. To handle the various coordinate systems, tf adopts distinctive concepts such as frames and trees.

Figure 12. tf frame example.

- slam_gmapping. ROS wrapper for OpenSlam's Gmapping. gmapping is one of the most famous SLAM algorithms. It is still popular, but several alternatives are now available for this function.

- move_base. Core module for autonomous navigation. This package provides various functions, including planning a route, maintaining cost maps, and issuing speed and direction commands for motors.

- robot_state_publisher. Publishes the 3D poses of the robot links, which are important for a manipulator or humanoid. In the case of SAWR, the most important data maintained by this module is the position and orientation of the robot and the location of the camera relative to the robot’s position.
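The kind of bookkeeping that a transform tree performs can be sketched in two dimensions. The example below chains a robot pose in the map with a fixed camera offset to express a camera observation in map coordinates. The function names and all numeric values are assumptions for this example, and the code does not use the tf library itself.

```python
import math

def compose(parent_to_mid, mid_to_child):
    """Chain two 2D transforms (x, y, theta): parent->mid, then mid->child."""
    x1, y1, t1 = parent_to_mid
    x2, y2, t2 = mid_to_child
    return (
        x1 + math.cos(t1) * x2 - math.sin(t1) * y2,
        y1 + math.sin(t1) * x2 + math.cos(t1) * y2,
        t1 + t2,
    )

def transform_point(transform, point):
    """Express a point given in the child frame in the parent frame."""
    x, y, t = transform
    px, py = point
    return (
        x + math.cos(t) * px - math.sin(t) * py,
        y + math.sin(t) * px + math.cos(t) * py,
    )

# Hypothetical values: robot at (2, 1) in the map, facing along +y.
map_to_base = (2.0, 1.0, math.pi / 2)
# Camera mounted 10 cm ahead of the robot center, same heading.
base_to_camera = (0.1, 0.0, 0.0)

map_to_camera = compose(map_to_base, base_to_camera)

# An obstacle seen 1 m straight ahead of the camera.
obstacle_in_camera = (1.0, 0.0)
obstacle_in_map = transform_point(map_to_camera, obstacle_in_camera)
print(map_to_camera, obstacle_in_map)
```

In a real system, the map-to-robot transform changes continuously as localization updates, while the robot-to-camera transform is fixed by the robot description.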

Tips for Building a Custom Robot using the SAWR Stack

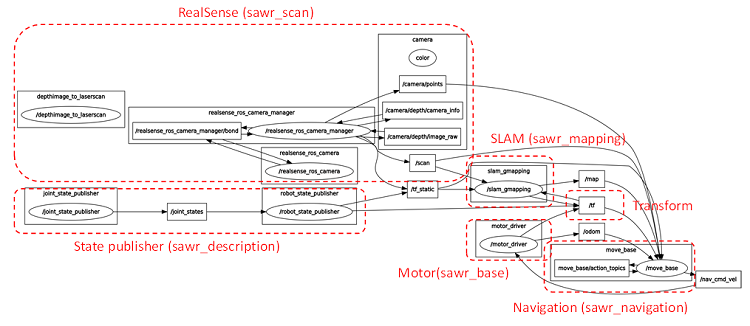

SAWR consists of the following subdirectories, which you can use as-is if you want to utilize the complete SAWR software and hardware package (see Figure 13). You can also use them as a starting point for your original robot with the Intel RealSense camera. Also below are tips for customizing the SAWR stack for use with other robot hardware.

- sawr_master: Master package, launch scripts.

  - Modify if you change another ROS module.

- sawr_description: Runtime physical description (URDF files).

  - Modify urdf and xacro files according to your robot’s dimensions (check with tf tree/frame).

- sawr_base: Motor controller and hardware interfacing.

  - Prepare your own motor controller and odometry libraries.

- sawr_scan: Camera configuration.

  - You can use as-is if you use the Intel RealSense camera R200 or ZR300. If you use the Intel RealSense camera D400 series, use ROS Wrapper 2.0 for Intel® RealSense™ Devices.

- sawr_mapping: SLAM configuration.

  - You can begin as-is if you use the same Intel RealSense camera configuration as SAWR.

- sawr_navigation: Move-base configuration.

  - Modify and tune parameters of global/local costmap, move_base. This is the most difficult part of tuning your own hardware.

Figure 13. SAWR ROS node graph viewed by rqt_graph.
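If you prepare your own motor controller and odometry code for a different frame, the core math for a two-wheel differential-drive base is compact. The sketch below converts a velocity command into wheel speeds and dead-reckons a pose from wheel speeds. It is a generic model, not the SAWR driver, and the wheel separation and radius are placeholder values rather than SAWR dimensions.

```python
import math

def wheel_speeds(linear, angular, wheel_separation, wheel_radius):
    """Convert a body velocity command into wheel angular speeds (rad/s).

    linear: forward speed in m/s.
    angular: turn rate in rad/s, counterclockwise positive.
    Returns (left, right).
    """
    v_left = linear - angular * wheel_separation / 2.0
    v_right = linear + angular * wheel_separation / 2.0
    return v_left / wheel_radius, v_right / wheel_radius

def integrate_odometry(pose, left_rate, right_rate,
                       wheel_separation, wheel_radius, dt):
    """Dead-reckon a new (x, y, theta) from wheel speeds over dt seconds."""
    x, y, theta = pose
    v_left = left_rate * wheel_radius
    v_right = right_rate * wheel_radius
    linear = (v_left + v_right) / 2.0
    angular = (v_right - v_left) / wheel_separation
    x += linear * math.cos(theta) * dt
    y += linear * math.sin(theta) * dt
    theta += angular * dt
    return (x, y, theta)

SEPARATION = 0.20  # meters between the wheels (placeholder value)
RADIUS = 0.03      # wheel radius in meters (placeholder value)

# Drive straight at 0.15 m/s for one second in ten 0.1 s steps.
left, right = wheel_speeds(0.15, 0.0, SEPARATION, RADIUS)
pose = (0.0, 0.0, 0.0)
for _ in range(10):
    pose = integrate_odometry(pose, left, right, SEPARATION, RADIUS, dt=0.1)

# Turning in place: the wheels spin in opposite directions.
spin_left, spin_right = wheel_speeds(0.0, 1.0, SEPARATION, RADIUS)
print(left, right, pose, spin_left, spin_right)
```

Errors in the measured wheel separation or radius accumulate in the dead-reckoned pose, which is one reason the localization module corrects the estimate against the map.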

Conclusion

Autonomous mobile robotics is an emerging area, but the technology for mobile robotics is already relatively mature. ROS is a key framework for robot software development that provides a wide range of modules covering many areas of robotics. At the time of writing, the latest version is Lunar, the 11th ROS distribution release. Robotics involves all aspects of computer science and engineering, including artificial intelligence, computer vision, machine learning, speech understanding, the Internet of Things, networking, and real-time control, and the SAWR project is a good starting point for developing ROS*-based robotics applications.

About the Author

Sakemoto is an application engineer in the Intel® Software and Services Group. He is responsible for software enabling and also works with application vendors in the area of embedded systems and robotics. Prior to his current job, he was a software engineer for various mobile devices including embedded Linux and Windows*.