Introduction

This tutorial explores the deep learning models for face detection, age, gender, and emotion recognition, and head pose estimation that are included in releases of the Intel® Distribution of OpenVINO™ toolkit. It also demonstrates the use of the toolkit's architectural components, such as the Intel® Deep Learning Deployment Toolkit, which enables software developers to deploy pretrained models in user applications through a high-level C++ library called the Inference Engine.

The tutorial includes instructions for building and running a sample application with pretrained recognition models on the UP Squared* Grove* IoT Development Kit. The UP Squared* platform comes preinstalled with an Ubuntu* 16.04.4 Desktop image and the Intel Distribution of OpenVINO toolkit.

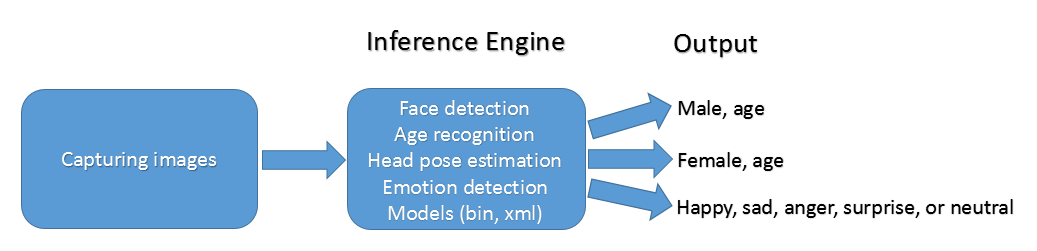

Figure 1 illustrates the relationship of captured images to the Inference Engine and detection results.

Figure 1. Age, gender or emotion recognition flow

Requirements

Table 1. Requirements

| Hardware | Software |
|---|---|
| UP Squared* Grove* IoT Development Kit | Pre-installed Ubuntu* 16.04.4 Desktop image |
| A web camera with a USB port | Intel Distribution of OpenVINO toolkit (pre-installed on the UP Squared platform). This tutorial uses both the pre-installed version and the R3 version (2018.3.343). To upgrade or refresh your version, see Free Download. |
| A monitor with an HDMI interface and cable | |
| USB keyboard and mouse | |
| A network connection with Internet access, or the Wi-Fi Kit for UP Squared* | |

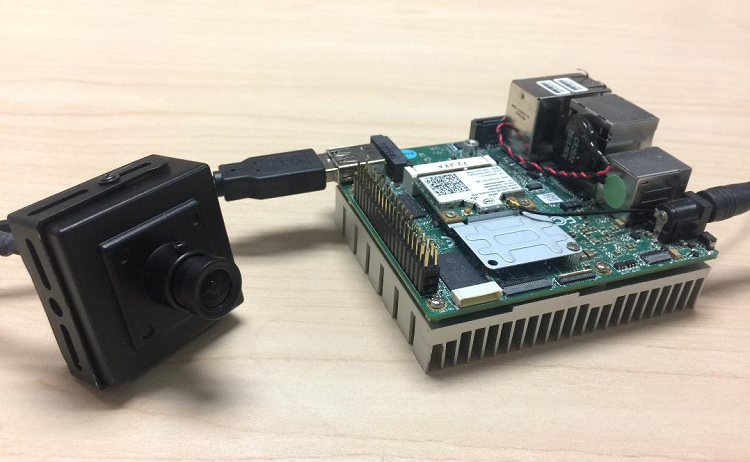

Figure 2. UP Squared with web camera

Run the Pre-installed Age and Gender Recognition Model

An Ubuntu* 16.04.4 Desktop image, along with the Intel Distribution of OpenVINO toolkit, comes pre-installed on the UP Squared* board. Table 2 describes the directory structure of the installation.

Table 2. Directories and key files in the Intel Distribution of OpenVINO Toolkit

| Component | Location |
|---|---|
| Root directory | /opt/intel/computer_vision_sdk/deployment_tools |
| Intel models | /opt/intel/computer_vision_sdk/deployment_tools/intel_models |
| setupvars.sh | /opt/intel/computer_vision_sdk/bin/setupvars.sh |
| Build | /opt/intel/computer_vision_sdk/deployment_tools/inference_engine/samples/build |
| Binary | /opt/intel/computer_vision_sdk/deployment_tools/inference_engine/samples/build/intel64/Release |
| Demo scripts (classification and security barrier camera) | /opt/intel/computer_vision_sdk/deployment_tools/demo |

Build the age and gender recognition application

Update the repository list and install the prerequisite packages.

sudo -E apt update

sudo -E apt -y install cmake libpng12-dev libcairo2-dev libpango1.0-dev libglib2.0-dev libgtk2.0-dev libgstreamer0.10-dev libswscale-dev libavcodec-dev libavformat-dev

Set the environment variables. ROOT_DIR points to the root directory of the pre-installed toolkit listed in Table 2.

export ROOT_DIR=/opt/intel/computer_vision_sdk/deployment_tools

source $ROOT_DIR/../bin/setupvars.sh

Go to the samples directory, create the build directory if it does not already exist, and then go to the build directory.

cd $ROOT_DIR/inference_engine/samples

sudo mkdir -p build

cd $ROOT_DIR/inference_engine/samples/build

Generate the makefiles. Use this command for a release build, without debug information:

sudo cmake -DCMAKE_BUILD_TYPE=Release ..

Use this command instead for a debug build, with debug information:

sudo cmake -DCMAKE_BUILD_TYPE=Debug ..

Build only the interactive face detection sample:

sudo make -j8 interactive_face_detection_sample

Alternatively, build all samples:

sudo make -j8

The build generates the interactive_face_detection_sample executable in the intel64/Release or intel64/Debug directory, depending on the build type.

ls $ROOT_DIR/inference_engine/samples/build/intel64/Release/interactive_face_detection_sample
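The build steps can be collected into one bash function. This is a minimal sketch, not part of the toolkit. The function name `build_ie_samples` and the `CMAKE`, `MAKE`, and `SUDO` override variables are hypothetical. They are added so you can preview the commands (for example with `CMAKE=echo MAKE=echo`) before running them. The sketch assumes `ROOT_DIR` is set as described above. It uses `sudo` by default because the tutorial runs the build with `sudo`.

```bash
# Hypothetical helper (not shipped with OpenVINO): configure and build the
# Inference Engine samples. Assumes ROOT_DIR points at .../deployment_tools.
# CMAKE, MAKE and SUDO can be overridden (e.g. CMAKE=echo) to preview commands.
build_ie_samples() {
    local build_type="${1:-Release}"   # Release (no debug info) or Debug
    local target="${2:-}"              # empty = build all samples
    local samples_dir="$ROOT_DIR/inference_engine/samples"
    local cmake_cmd="${CMAKE:-cmake}" make_cmd="${MAKE:-make}"
    local sudo_cmd="${SUDO-sudo}"      # SUDO= (empty) runs without sudo

    case "$build_type" in
        Release|Debug) ;;
        *) echo "unsupported build type: $build_type" >&2; return 1 ;;
    esac

    # mkdir -p succeeds whether or not the build directory already exists.
    $sudo_cmd mkdir -p "$samples_dir/build" || return 1
    (
        cd "$samples_dir/build" || exit 1
        # The CMake variable that selects the build type is CMAKE_BUILD_TYPE.
        $sudo_cmd "$cmake_cmd" -DCMAKE_BUILD_TYPE="$build_type" .. || exit 1
        # An empty $target expands to nothing, so make builds all samples.
        $sudo_cmd "$make_cmd" -j8 $target
    )
}
```

For example, `build_ie_samples Release interactive_face_detection_sample` runs the release configuration and builds only the interactive face detection sample, as in the steps above.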

Run the age and gender recognition application

Run the application with -h to display all available options.

cd $ROOT_DIR/inference_engine/samples/build/intel64/Release

./interactive_face_detection_sample -h

InferenceEngine:

API version ............ 1.0

Build .................. 10478

interactive_face_detection [OPTION]

Options:

-h Print a usage message.

-i "<path>" Optional. Path to an video file. Default value is "cam" to work with camera.

-m "<path>" Required. Path to an .xml file with a trained face detection model.

-m_ag "<path>" Optional. Path to an .xml file with a trained age gender model.

-m_hp "<path>" Optional. Path to an .xml file with a trained head pose model.

-l "<absolute_path>" Required for MKLDNN (CPU)-targeted custom layers.Absolute path to a shared library with the kernels impl.

Or

-c "<absolute_path>" Required for clDNN (GPU)-targeted custom kernels.Absolute path to the xml file with the kernels desc.

-d "<device>" Specify the target device for Face Detection (CPU, GPU, FPGA, or MYRYAD. Sample will look for a suitable plugin for device specified.

-d_ag "<device>" Specify the target device for Age Gender Detection (CPU, GPU, FPGA, or MYRYAD. Sample will look for a suitable plugin for device specified.

-d_hp "<device>" Specify the target device for Head Pose Detection (CPU, GPU, FPGA, or MYRYAD. Sample will look for a suitable plugin for device specified.

-n_ag "<num>" Specify number of maximum simultaneously processed faces for Age Gender Detection ( default is 16).

-n_hp "<num>" Specify number of maximum simultaneously processed faces for Head Pose Detection ( default is 16).

-no_wait No wait for key press in the end.

-no_show No show processed video.

-pc Enables per-layer performance report.

-r Inference results as raw values.

-t Probability threshold for detections.

The interactive face detection application uses three models. Each one is specified as the path to an .xml file:

- A trained face detection model (-m)

- A trained age and gender recognition model (-m_ag)

- A trained head pose estimation model (-m_hp)

The -i option accepts either cam, to stream from the web camera (the default), or the path to a video file.
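Because -i takes either the literal `cam` or a file path, a wrapper script can check the value before launching the sample. This is a minimal bash sketch. `check_input_source` is a hypothetical helper, and the sample itself may report a bad input differently.

```bash
# Hypothetical helper: succeed if the value is usable for the sample's -i option,
# i.e. the literal "cam" (web camera) or a path to a readable regular file.
check_input_source() {
    local src="$1"
    if [ "$src" = "cam" ]; then
        return 0
    elif [ -f "$src" ] && [ -r "$src" ]; then
        return 0
    fi
    echo "input '$src' is neither 'cam' nor a readable video file" >&2
    return 1
}
```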

Run the application with a web camera

Plug the web camera into a USB port of the UP Squared board. Open an Ubuntu console and list the video devices.

ls -ltrh /dev/video*

A connected camera appears as a video device, for example:

crw-rw----+ 1 root video 81, 0 Sep 27 12:48 /dev/video0

If there aren’t any /dev/video files on the system, ensure that the web camera is plugged into a USB port.

Run the interactive face detection application with the camera.

./interactive_face_detection_sample -d CPU -d_ag CPU -d_hp CPU -i cam -m "$ROOT_DIR/intel_models/face-detection-adas-0001/FP32/face-detection-adas-0001.xml" -m_ag "$ROOT_DIR/intel_models/age-gender-recognition-retail-0013/FP32/age-gender-recognition-retail-0013.xml" -m_hp "$ROOT_DIR/intel_models/head-pose-estimation-adas-0001/FP32/head-pose-estimation-adas-0001.xml"
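A launch script can check for a camera before it passes `-i cam`. This is a minimal bash sketch. `list_video_devices` is a hypothetical name, and it only checks that the device nodes exist, not that the camera works. The optional prefix argument makes it easy to try the function against a scratch directory.

```bash
# Hypothetical helper: print the video device nodes that exist, one per line,
# and return non-zero if there are none. $1 is the path prefix to search
# (default /dev/video).
list_video_devices() {
    local prefix="${1:-/dev/video}"
    local dev found=1
    for dev in "$prefix"*; do
        # If nothing matches, the glob stays unexpanded; -e filters that case out.
        if [ -e "$dev" ]; then
            printf '%s\n' "$dev"
            found=0
        fi
    done
    if [ "$found" -ne 0 ]; then
        echo "no video devices found; check that the web camera is plugged into USB" >&2
    fi
    return "$found"
}
```

For example, `list_video_devices && ./interactive_face_detection_sample -i cam ...` starts the sample only when at least one /dev/video node is present.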

Run the application with a path to a video file

The application also accepts pre-recorded video files. The following example uses a pre-recorded video; replace the path with the location of your own video file.

./interactive_face_detection_sample -d CPU -d_ag CPU -d_hp CPU -i /home/upsquared/head-pose-face-detection-female-and-male.mp4 -m "$ROOT_DIR/intel_models/face-detection-adas-0001/FP32/face-detection-adas-0001.xml" -m_ag "$ROOT_DIR/intel_models/age-gender-recognition-retail-0013/FP32/age-gender-recognition-retail-0013.xml" -m_hp "$ROOT_DIR/intel_models/head-pose-estimation-adas-0001/FP32/head-pose-estimation-adas-0001.xml"
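The camera and video-file commands differ only in the -i value. Every model path follows the pattern intel_models/<model>/FP32/<model>.xml, so a small launch script can build the paths from the model names. This is a bash sketch. `model_xml`, `run_face_sample`, and the `SAMPLE_BIN` override are hypothetical names, and `ROOT_DIR` is assumed to be set as in the build steps.

```bash
# Hypothetical helper, assuming the intel_models layout used in the commands
# above: intel_models/<model>/<precision>/<model>.xml (precision defaults to FP32).
model_xml() {
    local model="$1" precision="${2:-FP32}"
    printf '%s/intel_models/%s/%s/%s.xml\n' "$ROOT_DIR" "$model" "$precision" "$model"
}

# Launch the sample on CPU with the face detection, age/gender and head pose models.
# $1 is "cam" or a video file path. SAMPLE_BIN is a hypothetical override
# (e.g. SAMPLE_BIN=echo prints the arguments instead of running the sample).
run_face_sample() {
    local input="${1:-cam}"
    "${SAMPLE_BIN:-./interactive_face_detection_sample}" \
        -d CPU -d_ag CPU -d_hp CPU -i "$input" \
        -m "$(model_xml face-detection-adas-0001)" \
        -m_ag "$(model_xml age-gender-recognition-retail-0013)" \
        -m_hp "$(model_xml head-pose-estimation-adas-0001)"
}
```

When run from the Release directory, `run_face_sample cam` matches the web camera command. `run_face_sample /home/upsquared/head-pose-face-detection-female-and-male.mp4` matches the video file command.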

Interpret detection results

The application uses OpenCV* to display the visual results for either web camera or pre-recorded video. Visual results include:

- A bounding box around each detected face

- A set of multi-colored axes at the center of the bounding box, showing the estimated head pose

- Text at the upper-left corner of the bounding box showing the gender and estimated age

- The processing times for face detection, age and gender recognition, and head pose estimation

Figure 3. Age gender recognition results

Floating point error

The CPU plugin can run only models with 32-bit floating-point (FP32) precision. Loading an FP16 model on the CPU fails with the following error:

ERROR: The plugin does not support F16

If this error occurs, change the model paths to point to the FP32 versions of the models.
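A launch script can catch this mismatch before the plugin does by checking the precision directory in each model path. This is a minimal bash sketch that assumes the intel_models/<model>/<precision>/ layout used in this tutorial. `check_cpu_precision` is hypothetical, and it inspects only the path, not the model file itself.

```bash
# Hypothetical pre-flight check: the CPU plugin accepts only FP32 models, so
# refuse to pair the CPU device with a model path outside an FP32 folder.
check_cpu_precision() {
    local device="$1" model_path="$2"
    if [ "$device" = "CPU" ]; then
        case "$model_path" in
            */FP32/*) return 0 ;;
            *) echo "CPU needs an FP32 model, got: $model_path" >&2; return 1 ;;
        esac
    fi
    return 0   # other devices are not checked by this sketch
}
```

For example, `check_cpu_precision CPU "$ROOT_DIR/intel_models/face-detection-adas-0001/FP32/face-detection-adas-0001.xml"` succeeds. The same call with an FP16 path fails.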

Run demo scripts

The classification and security barrier camera demo scripts are located in /opt/intel/computer_vision_sdk/deployment_tools/demo. Refer to README.txt for instructions on how to use the demo scripts and how to create your own age, gender, and emotion demo script.

Run the Age, Gender, and Emotion Recognition Model in the Intel Distribution of OpenVINO Toolkit R3

Download the Intel Distribution of OpenVINO Toolkit R3. Follow the installation instructions for Linux*. The installation creates the directory structure in Table 3.

Table 3. Directories and Key Files in the Intel Distribution of the OpenVINO Toolkit R3

| Component | Location |
|---|---|
| Root directory | /opt/intel/computer_vision_sdk_2018.3.343/deployment_tools |
| Intel models | /opt/intel/computer_vision_sdk_2018.3.343/deployment_tools/intel_models |
| setupvars.sh | /opt/intel/computer_vision_sdk_2018.3.343/bin/setupvars.sh |
| Build | /home/upsquared/inference_engine_samples |
| Binary | /home/upsquared/inference_engine_samples/intel64/Release |
| Demo scripts | /opt/intel/computer_vision_sdk_2018.3.343/deployment_tools/demo |

Build the Intel age, gender, and emotion recognition application

Update the repository list and install the prerequisite packages.

sudo -E apt update

sudo -E apt -y install build-essential cmake libpng12-dev libcairo2-dev libpango1.0-dev libglib2.0-dev libgtk2.0-dev libswscale-dev libavcodec-dev libavformat-dev libgstreamer1.0-0 gstreamer1.0-plugins-base

Set the environment variables.

export ROOT_DIR=/opt/intel/computer_vision_sdk_2018.3.343/deployment_tools

source $ROOT_DIR/../bin/setupvars.sh

Go to the samples directory, create the build directory if it does not already exist, and then go to the build directory.

cd $ROOT_DIR/inference_engine/samples

sudo mkdir -p build

cd $ROOT_DIR/inference_engine/samples/build

Generate the makefiles. Use this command for a release build, without debug information:

sudo cmake -DCMAKE_BUILD_TYPE=Release ..

Use this command instead for a debug build, with debug information:

sudo cmake -DCMAKE_BUILD_TYPE=Debug ..

Build only the interactive face detection sample:

sudo make -j8 interactive_face_detection_sample

Alternatively, build all samples:

sudo make -j8

The build generates the interactive_face_detection_sample executable in the intel64/Release or intel64/Debug directory, depending on the build type.

ls $ROOT_DIR/inference_engine/samples/build/intel64/Release/interactive_face_detection_sample
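The executable can end up in either intel64/Release or intel64/Debug, depending on the CMake build type, so a script can look in both. This is a bash sketch. `find_sample_binary` is a hypothetical helper. Pass it whichever build directory you used, for example $ROOT_DIR/inference_engine/samples/build.

```bash
# Hypothetical helper: print the path of the built sample, preferring the
# Release build and falling back to Debug. $1 is the build directory.
find_sample_binary() {
    local build_dir="$1" config bin
    for config in Release Debug; do
        bin="$build_dir/intel64/$config/interactive_face_detection_sample"
        if [ -x "$bin" ]; then
            printf '%s\n' "$bin"
            return 0
        fi
    done
    echo "interactive_face_detection_sample not found under $build_dir/intel64" >&2
    return 1
}
```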

Run the Intel age, gender, and emotion recognition application

Run the application with -h to display all available options.

cd ~/inference_engine_samples/intel64/Release

./interactive_face_detection_sample -h

InferenceEngine:

API version ............ 1.2

Build .................. 13911

interactive_face_detection [OPTION]

Options:

-h Print a usage message.

-i "<path>" Optional. Path to an video file. Default value is "cam" to work with camera.

-m "<path>" Required. Path to an .xml file with a trained face detection model.

-m_ag "<path>" Optional. Path to an .xml file with a trained age gender model.

-m_hp "<path>" Optional. Path to an .xml file with a trained head pose model.

-m_em "<path>" Optional. Path to an .xml file with a trained emotions model.

-l "<absolute_path>" Required for MKLDNN (CPU)-targeted custom layers.Absolute path to a shared library with the kernels impl.

Or

-c "<absolute_path>" Required for clDNN (GPU)-targeted custom kernels.Absolute path to the xml file with the kernels desc.

-d "<device>" Specify the target device for Face Detection (CPU, GPU, FPGA, or MYRIAD). Sample will look for a suitable plugin for device specified.

-d_ag "<device>" Specify the target device for Age Gender Detection (CPU, GPU, FPGA, or MYRIAD). Sample will look for a suitable plugin for device specified.

-d_hp "<device>" Specify the target device for Head Pose Detection (CPU, GPU, FPGA, or MYRIAD). Sample will look for a suitable plugin for device specified.

-d_em "<device>" Specify the target device for Emotions Detection (CPU, GPU, FPGA, or MYRIAD). Sample will look for a suitable plugin for device specified.

-n_ag "<num>" Specify number of maximum simultaneously processed faces for Age Gender Detection (default is 16).

-n_hp "<num>" Specify number of maximum simultaneously processed faces for Head Pose Detection (default is 16).

-n_em "<num>" Specify number of maximum simultaneously processed faces for Emotions Detection (default is 16).

-dyn_ag Enable dynamic batch size for AgeGender net.

-dyn_hp Enable dynamic batch size for HeadPose net.

-dyn_em Enable dynamic batch size for Emotions net.

-async Enable asynchronous mode

-no_wait No wait for key press in the end.

-no_show No show processed video.

-pc Enables per-layer performance report.

-r Inference results as raw values.

-t Probability threshold for detections.

In this example, the interactive face detection application uses three models. Each one is specified as the path to an .xml file:

- A trained face detection model (-m)

- A trained age and gender recognition model (-m_ag)

- A trained emotion recognition model (-m_em)

The -i option accepts either cam, to stream from the web camera, or the path to a video file.

Run the application with a web camera

Plug the web camera into a USB port of the UP Squared board. Open an Ubuntu console and list the video devices.

ls -ltrh /dev/video*

A connected camera appears as a video device, for example:

crw-rw----+ 1 root video 81, 0 Sep 27 12:48 /dev/video0

If there aren’t any /dev/video files on the system, ensure that the web camera is plugged into a USB port.

Run the interactive face detection application with the age and gender model and the emotion recognition model, using the camera.

./interactive_face_detection_sample -d CPU -d_em CPU -dyn_em -i cam -m "$ROOT_DIR/intel_models/face-detection-adas-0001/FP32/face-detection-adas-0001.xml" -m_ag "$ROOT_DIR/intel_models/age-gender-recognition-retail-0013/FP32/age-gender-recognition-retail-0013.xml" -m_em "$ROOT_DIR/intel_models/emotions-recognition-retail-0003/FP32/emotions-recognition-retail-0003.xml"

Run the application with a path to a video file

The application also accepts pre-recorded video files. The following example uses a pre-recorded video; replace the path with the location of your own video file.

./interactive_face_detection_sample -d CPU -d_em CPU -dyn_em -i /home/upsquared/head-pose-face-detection-female.mp4 -m "$ROOT_DIR/intel_models/face-detection-adas-0001/FP32/face-detection-adas-0001.xml" -m_ag "$ROOT_DIR/intel_models/age-gender-recognition-retail-0013/FP32/age-gender-recognition-retail-0013.xml" -m_em "$ROOT_DIR/intel_models/emotions-recognition-retail-0003/FP32/emotions-recognition-retail-0003.xml"
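The help output marks only -m as required; the age and gender model and the emotion model are optional. A launch script can therefore add each optional model only when its file is present. This is a minimal bash sketch. `run_emotion_sample` and the `SAMPLE_BIN` override are hypothetical, and the device flags mirror the commands above.

```bash
# Hypothetical launcher (not part of OpenVINO): -m is required, while -m_ag and
# -m_em are optional, so each optional model flag is added only if its .xml exists.
# SAMPLE_BIN is a hypothetical override, e.g. SAMPLE_BIN=echo to preview the command.
run_emotion_sample() {
    local input="$1" face_xml="$2" ag_xml="$3" em_xml="$4"
    if [ ! -f "$face_xml" ]; then
        echo "face detection model not found: $face_xml" >&2
        return 1
    fi
    # Rebuild the positional parameters as the sample's argument list.
    set -- -d CPU -d_em CPU -dyn_em -i "$input" -m "$face_xml"
    if [ -f "$ag_xml" ]; then
        set -- "$@" -m_ag "$ag_xml"
    fi
    if [ -f "$em_xml" ]; then
        set -- "$@" -m_em "$em_xml"
    fi
    "${SAMPLE_BIN:-./interactive_face_detection_sample}" "$@"
}
```

For example, call `run_emotion_sample cam` followed by the three FP32 .xml paths from the command above. If a model file is missing, its flag is left out of the command.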

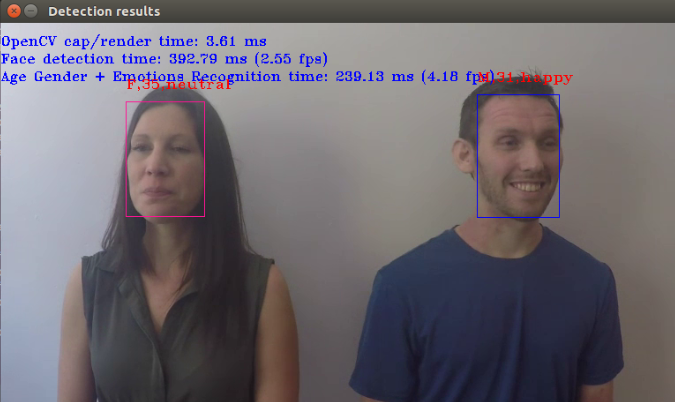

Interpret detection results

The application uses OpenCV* to display the visual results for either web camera or pre-recorded video. Visual results include:

- A bounding box around each detected face

- Text above each bounding box showing the gender, estimated age, and emotion of the detected face, such as neutral, happy, sad, surprised, or angry

- The time to render each frame and the processing times for face detection, age and gender recognition, and emotion recognition

Figure 4. Emotion recognition results

Floating point error

The CPU plugin can run only models with 32-bit floating-point (FP32) precision. Loading an FP16 model on the CPU fails with the following error:

ERROR: The plugin does not support F16

If this error occurs, change the model paths to point to the FP32 versions of the models.

Run Intel demo script

The classification and security barrier camera demo scripts are located in /opt/intel/computer_vision_sdk_2018.3.343/deployment_tools/demo. Refer to README.txt for instructions on how to use the demo scripts and how to create your own age, gender, and emotion demo script.

Summary

This tutorial describes how to run the pretrained Intel age, gender, and emotion recognition models with the Inference Engine. Try the other pretrained models available in /opt/intel/computer_vision_sdk_2018.3.343/deployment_tools/intel_models on the UP Squared* board.
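To see which of those pretrained models can run on the CPU, a short loop can list the ones that include an FP32 .xml file. This is a bash sketch that assumes the intel_models/<model>/FP32/<model>.xml layout used throughout this tutorial. `list_fp32_models` is a hypothetical name.

```bash
# Hypothetical helper: list the models under an intel_models directory that ship
# an FP32 IR, i.e. that have <model>/FP32/<model>.xml (usable on the CPU plugin).
# $1 defaults to $ROOT_DIR/intel_models.
list_fp32_models() {
    local models_dir="${1:-$ROOT_DIR/intel_models}" dir name
    for dir in "$models_dir"/*/; do
        name="$(basename "$dir")"
        if [ -f "$dir/FP32/$name.xml" ]; then
            printf '%s\n' "$name"
        fi
    done
    return 0
}
```

For example, run `list_fp32_models /opt/intel/computer_vision_sdk_2018.3.343/deployment_tools/intel_models`.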

Key References

- Intel® Developer Zone (Intel® DZ)

- UP Squared*

- Intel® Distribution of OpenVINO™ toolkit

- Intel Distribution of OpenVINO toolkit Release Notes

- Intel Distribution of OpenVINO toolkit Forum

- Inference Engine

About the Author

Nancy Le is a software engineer at Intel Corporation in the Core & Visual Computing Group, working on Intel Atom® processor enabling for Internet of Things (IoT) projects.