Improve Your Application Performance by Changing Communications

Improve the performance of the MPI application by replacing blocking communication with non-blocking communication.

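In your code, replace the blocking MPI_Send/MPI_Recv pairs with the non-blocking calls MPI_Isend and MPI_Irecv, and complete them with MPI_Waitall. A blocking MPI_Send may not return until the matching receive has been posted. Each process can therefore post its own MPI_Recv only after its neighbor has posted one, so the processes run the exchange one after another instead of concurrently. For example: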

Original code snippet:

```c
// boundary exchange
// left_col and right_col are not declared here; they are presumably
// file-scope buffers of at least lrow+2 doubles
void exchange(para* p, grid* gr)
{
    int i, j;
    MPI_Status status_100, status_200, status_300, status_400;

    // send down first row
    MPI_Send(gr->x_new[1], gr->lcol+2, MPI_DOUBLE, gr->down, 100, MPI_COMM_WORLD);
    MPI_Recv(gr->x_new[gr->lrow+1], gr->lcol+2, MPI_DOUBLE, gr->up, 100, MPI_COMM_WORLD, &status_100);

    // send up last row
    MPI_Send(gr->x_new[gr->lrow], gr->lcol+2, MPI_DOUBLE, gr->up, 200, MPI_COMM_WORLD);
    MPI_Recv(gr->x_new[0], gr->lcol+2, MPI_DOUBLE, gr->down, 200, MPI_COMM_WORLD, &status_200);

    // copy left column to tmp array and send it left
    if (gr->left != MPI_PROC_NULL) {
        for (i = 0; i < gr->lrow+2; i++) {
            left_col[i] = gr->x_new[i][1];
        }
        MPI_Send(left_col, gr->lrow+2, MPI_DOUBLE, gr->left, 300, MPI_COMM_WORLD);
    }

    if (gr->right != MPI_PROC_NULL) {
        MPI_Recv(right_col, gr->lrow+2, MPI_DOUBLE, gr->right, 300, MPI_COMM_WORLD, &status_300);
        // copy received right column to ghost cells,
        // then copy own right column to tmp
        for (i = 0; i < gr->lrow+2; i++) {
            gr->x_new[i][gr->lcol+1] = right_col[i];
            right_col[i] = gr->x_new[i][gr->lcol];
        }
        // send right
        MPI_Send(right_col, gr->lrow+2, MPI_DOUBLE, gr->right, 400, MPI_COMM_WORLD);
    }

    if (gr->left != MPI_PROC_NULL) {
        MPI_Recv(left_col, gr->lrow+2, MPI_DOUBLE, gr->left, 400, MPI_COMM_WORLD, &status_400);
        // copy received left column to ghost cells
        for (i = 0; i < gr->lrow+2; i++) {
            gr->x_new[i][0] = left_col[i];
        }
    }
}
```

Updated code snippet:

```c
MPI_Request req[7];

// send down first row
MPI_Isend(gr->x_new[1], gr->lcol+2, MPI_DOUBLE, gr->down, 100, MPI_COMM_WORLD, &req[0]);
MPI_Irecv(gr->x_new[gr->lrow+1], gr->lcol+2, MPI_DOUBLE, gr->up, 100, MPI_COMM_WORLD, &req[1]);
.....
// complete all requests before the ghost cells are read or the send buffers are reused
MPI_Waitall(7, req, MPI_STATUSES_IGNORE);
```

After this change, a single iteration of the revised application looks like the following example:

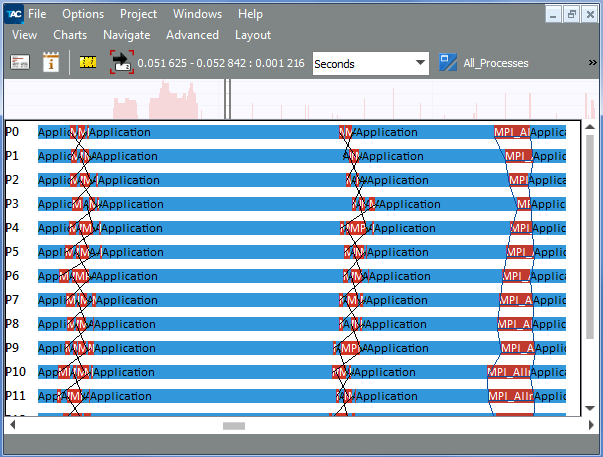
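The updated snippet elides the column exchange, and one detail matters when filling it in. The original exchange() reuses left_col and right_col for both sending and receiving. That is safe only because each blocking call finishes before the next one starts. With MPI_Isend and MPI_Irecv, two rules apply until a request completes:

- the buffer of a pending MPI_Isend must not be overwritten;
- the buffer of a pending MPI_Irecv must not be accessed.

A safer pattern therefore uses separate send and receive buffers for each side. It packs the outgoing columns before posting MPI_Isend and unpacks the received columns into the ghost cells only after MPI_Waitall returns.

The helpers below are a minimal, hypothetical sketch of that pack/unpack step. The names pack_column and unpack_column are not from the original code, and the sketch assumes x_new is an array of row pointers (double **) with lrow+2 rows.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical helpers, not part of the original application.
 * Assumption: x[i] points to row i of the grid, and nrows = lrow+2
 * includes the two ghost rows. */

/* Copy column `col` of the grid into the contiguous buffer `buf`
 * (call before posting MPI_Isend on `buf`). */
void pack_column(double **x, size_t nrows, size_t col, double *buf)
{
    assert(x != NULL && buf != NULL);
    for (size_t i = 0; i < nrows; i++)
        buf[i] = x[i][col];
}

/* Copy the contiguous buffer `buf` into column `col` of the grid
 * (call only after the MPI_Irecv on `buf` has completed). */
void unpack_column(double **x, size_t nrows, size_t col, const double *buf)
{
    assert(x != NULL && buf != NULL);
    for (size_t i = 0; i < nrows; i++)
        x[i][col] = buf[i];
}
```

In the exchange this might look like pack_column(gr->x_new, gr->lrow+2, 1, send_left) before MPI_Isend(send_left, ...), and unpack_column(gr->x_new, gr->lrow+2, 0, recv_left) after MPI_Waitall. The buffer names send_left and recv_left are hypothetical. If the grid rows were allocated contiguously, an MPI derived datatype such as MPI_Type_vector could replace the explicit copy.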

Use the Comparison View of Intel Trace Analyzer to compare the trace of the serialized application with the trace of the revised one. To open it, go to View > Compare. The Comparison View looks similar to:

In the Comparison View, you can see that non-blocking communication removes the serialization and reduces the time the processes spend in communication.
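A deliberately simplified model shows why removing the serialization shortens communication. It is an illustration under stated assumptions, not a measurement. The model assumes the following:

- P ranks form a one-dimensional chain, and rank 0 is at the bottom, so its down neighbor is MPI_PROC_NULL.
- Every message takes t_msg time units, and computation and network contention are ignored.
- MPI_Send does not return until the matching receive is posted, as typically happens for large messages.

Under these assumptions, the "send down first row" phase of the original exchange() ends only after (P-1)·t_msg, because each rank's MPI_Send waits for the rank below to post its MPI_Recv. With MPI_Isend/MPI_Irecv, all messages can be in flight at once. The function names below are hypothetical.

```c
#include <assert.h>

/* Simplified model (assumption): rank 0 sends to MPI_PROC_NULL, each
 * message takes t_msg, and a blocking MPI_Send completes only after the
 * receiver has posted its MPI_Recv. Returns when the blocking
 * "send down, then receive from up" phase has finished on all ranks. */
double blocking_phase_time(int nprocs, double t_msg)
{
    assert(nprocs >= 1 && t_msg >= 0.0);
    double recv_posted = 0.0; /* time rank r-1 posted its MPI_Recv */
    double finish = 0.0;
    for (int r = 0; r < nprocs; r++) {
        /* Rank 0 sends to MPI_PROC_NULL and returns at once; rank r > 0
         * must wait until rank r-1 has posted the matching receive. */
        double send_done = (r == 0) ? 0.0 : recv_posted + t_msg;
        /* MPI_Recv is posted only after MPI_Send has returned. */
        recv_posted = send_done;
        if (send_done > finish)
            finish = send_done;
    }
    return finish;
}

/* Same model with MPI_Isend/MPI_Irecv: every request is posted at time 0,
 * so the nprocs-1 messages travel concurrently. */
double nonblocking_phase_time(int nprocs, double t_msg)
{
    assert(nprocs >= 1 && t_msg >= 0.0);
    return (nprocs > 1) ? t_msg : 0.0;
}
```

With 4 ranks and t_msg = 1.0, blocking_phase_time returns 3.0 and nonblocking_phase_time returns 1.0. This staircase of waiting processes is the pattern that the non-blocking version removes. For small messages that the MPI library sends eagerly, MPI_Send can return before the receive is posted, so the effect may be much weaker in practice.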