Introduction

This paper discusses the new features and enhancements available in the third generation Intel® Xeon® processor Scalable family and how developers can take advantage of them. The latest processor and platform provide additional new technologies, including BFLOAT16 to help with deep learning training, the second generation of Intel® Optane™ DC persistent memory, Intel® Platform Firmware Resilience (Intel® PFR) and Intel® Speed Select Technology - Turbo Frequency (Intel® SST-TF).

The table below compares the second and third generations of the Intel® Xeon® processor Scalable family. The third generation retains many of the second generation's features. New capabilities and changes relative to the previous generation are shown in bold italic.

Table 1. The Next-Generation Intel® Xeon® Processor Scalable Family Microarchitecture Overview

| Feature | Second Generation Intel® Xeon® processor Scalable family with Intel® C620 series chipset | Third Generation Intel® Xeon® processor Scalable family with Intel® C620A series chipset |
|---|---|---|
| Socket Count | 1, 2, 4, 8 | ***4 and 8 (glueless)*** |
| Process Technology | 14nm | 14nm |
| Processor Core Count | Up to 28 cores (56 threads with Intel® Hyper-Threading Technology (Intel® HT Technology)) per socket | Up to 28 cores (56 threads with Intel® Hyper-Threading Technology (Intel® HT Technology)) per socket |
| TDP | Up to 205W | ***Up to 250W*** |
| New Features | N/A | ***BFLOAT16, Intel® PFR, Intel® SST-TF on select SKUs, next generation of Intel® Virtual RAID on CPU (Intel® VROC), converged Intel® Boot Guard and Intel® Trusted Execution Technology (Intel® TXT)*** |
| Socket Type | Socket P | ***Socket P+*** |
| Memory (DDR4) | Up to 6 channels DDR4 per CPU, up to 12 DIMMs per socket, up to 2666 MT/s 2DPC, up to 2933 MT/s 1DPC | Up to 6 channels DDR4 per CPU, up to 12 DIMMs per socket, ***up to 2933 MT/s 2DPC, up to 3200 MT/s 1DPC*** |
| Number of Intel® Ultra Path Interconnect (Intel® UPI) Links | Up to 3 links per CPU | ***Up to 6 links per CPU*** |
| Intel® UPI Speed | Up to 10.4 GT/s | Up to 10.4 GT/s |
| PCIe | PCIe Gen 3: up to 48 lanes per CPU (bifurcation support: x16, x8, x4) | PCIe Gen 3: up to 48 lanes per CPU (bifurcation support: x16, x8, x4) |
| Chipset Features | Intel® QuickAssist Technology (Intel® QAT); Enhanced Serial Peripheral Interface (eSPI); up to 20 ports PCIe 3.0 (8 GT/s); up to 14 SATA 3, up to 14 USB 2.0, up to 10 USB 3.0 | Intel® QuickAssist Technology (Intel® QAT); Enhanced Serial Peripheral Interface (eSPI); up to 20 ports PCIe 3.0 (8 GT/s); up to 14 SATA 3, up to 14 USB 2.0, up to 10 USB 3.0 |

Figure 1. Third Generation Intel® Xeon® processor Scalable family with Intel® C620A Series Chipset on a four-socket platform. The number of Intel® UPI links increases compared with the previous generation, which can help workloads that rely on remote memory accesses.

Intel® Optane™ persistent memory 200 series

The next generation of Intel® Optane™ persistent memory modules provides large-capacity memory that can operate in either a volatile or a non-volatile mode, creating a new tier between DRAM and storage. Traditional computer memory is volatile: its contents exist only while the system supplies power and are lost immediately on a power failure. Persistent memory retains the information stored in it even when power is lost or cycled. It is byte addressable and cache coherent, and it gives software direct access to persistence without paging.
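Byte addressability means an application reaches persistent memory with ordinary loads and stores through a memory mapping instead of block I/O. The minimal Python sketch below assumes a file on a DAX-mounted filesystem backed by persistent memory (a path such as /mnt/pmem0/demo.dat would be hypothetical); on an ordinary filesystem the same code still runs but goes through the page cache. The helper names `write_bytes` and `read_bytes` are illustrative only.

```python
import mmap
import os


def write_bytes(path: str, offset: int, payload: bytes, size: int = 4096) -> None:
    """Store payload at a byte offset inside a memory-mapped file, then flush it."""
    fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o600)
    try:
        if os.fstat(fd).st_size < size:
            os.ftruncate(fd, size)  # give the mapping enough backing space
        with mmap.mmap(fd, size) as region:
            # Ordinary byte-granular stores into the mapping: no write() call.
            region[offset:offset + len(payload)] = payload
            # msync() the mapping so the update is durable.
            region.flush()
    finally:
        os.close(fd)


def read_bytes(path: str, offset: int, length: int) -> bytes:
    """Load bytes back through a read-only mapping of the whole file."""
    with open(path, "rb") as f:
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as region:
            return bytes(region[offset:offset + length])
```

Here durability comes from `flush()`, which asks the kernel to sync the mapping. PMDK's libpmem (for example `pmem_map_file()` and `pmem_persist()`) instead makes stores persistent from user space with cache flush instructions, which is the path the following sections on ADR and eADR are concerned with.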

The table below compares the first and second generations of persistent memory. New capabilities and changes relative to the previous generation are shown in bold italic.

Table 2. Intel® Optane™ persistent memory overview

| Intel® Optane™ DC Persistent Memory Family | Intel® Optane™ persistent memory 100 series | Intel® Optane™ persistent memory 200 series |
|---|---|---|
| Supported Number of Sockets | 2, 4, and 8 sockets | ***4 sockets*** |
| Number of Channels per Socket | Up to 6 | ***Up to 8*** |
| DIMM Capacity | 128GB, 256GB, 512GB | 128GB, 256GB, 512GB |
| Platform Capacity | Up to 3TB per socket | ***Up to 4TB per socket*** |
| DDR-T Speeds | Up to 2666 MT/s | ***Up to 3200 MT/s*** |
| Sustained TDP | 18W | ***12-15W*** |
| Data Persistence in Power Failure Event | ADR | ***ADR, eADR (optional)*** |
| Operating Modes | App Direct (AD), Memory Mode (MM), Mixed Mode (AD + MM) | App Direct (AD), Memory Mode (MM), Mixed Mode (AD + MM) |

Enhanced - Asynchronous DRAM Refresh (eADR)

In the first generation of persistent memory, a feature known as Asynchronous DRAM Refresh (ADR) triggers a hardware interrupt to the memory controller on power failure, which flushes the write pending queue (WPQ). This ensures that any data that reaches the WPQ on the memory controller also reaches persistent memory, protecting it in the event of a power failure. ADR protects data only at the memory subsystem level; it does not flush the processor caches. Applications must therefore flush cached data themselves with the CLWB, CLFLUSHOPT, or CLFLUSH instructions, bypass the caches with non-temporal stores, or, in privileged code, use WBINVD. ADR remains available with the second generation of persistent memory, which adds a new feature called eADR.

eADR extends this protection from the memory subsystem to the processor caches. On a power failure, an NMI routine flushes the processor caches, and an ADR event then follows. Applications built on the Persistent Memory Development Kit (PMDK) detect whether eADR is present and, if it is, skip cache flush operations. An SFENCE is still required, however, to make preceding stores globally visible, which with eADR is the point at which they become persistent. eADR also requires the OEM to provide additional stored energy, such as a backup battery, specifically to support this functionality.
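The difference between the ADR and eADR persistence domains can be illustrated with a toy Python model that tracks where a store lives on its way to the media. This is a deliberately simplified, hypothetical simulation, not how the hardware is implemented: the class and method names are invented for illustration, the WPQ is drained only at power failure, and store ordering (the job of SFENCE) is not modeled.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class PersistenceModel:
    """Toy model of the path a store takes toward persistent memory.

    dirty: lines modified in the CPU caches and not yet written back
    wpq:   lines accepted by the memory controller's write pending queue
    media: data that has reached persistent memory
    """
    eadr: bool = False
    dirty: Dict[int, str] = field(default_factory=dict)
    wpq: Dict[int, str] = field(default_factory=dict)
    media: Dict[int, str] = field(default_factory=dict)

    def store(self, addr: int, value: str) -> None:
        # An ordinary store only dirties a cache line.
        self.dirty[addr] = value

    def clwb(self, addr: int) -> None:
        # Write the line back to the memory controller; the cached copy
        # becomes clean, so it no longer needs protection.
        if addr in self.dirty:
            self.wpq[addr] = self.dirty.pop(addr)

    def power_failure(self) -> None:
        if self.eadr:
            # eADR: stored energy lets the caches be flushed into the WPQ.
            self.wpq.update(self.dirty)
        self.dirty.clear()           # anything still only in the caches is lost
        self.media.update(self.wpq)  # ADR drains the WPQ to the media
        self.wpq.clear()
```

With ADR only the flushed line survives, while with eADR the unflushed line survives as well:

```python
adr = PersistenceModel(eadr=False)
adr.store(0x40, "a"); adr.store(0x80, "b"); adr.clwb(0x40)
adr.power_failure()   # adr.media == {0x40: "a"}
```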

Intel® Optane™ persistent memory management tools and resources

Linux* Management Tools for Intel® Optane™ DC Persistent Memory

ndctl - Manage the Linux LIBNVDIMM kernel subsystem.

pmemcheck - Perform a dynamic runtime analysis with an enhanced version of Valgrind.

pmempool - Manage and analyze persistent memory pools with this stand-alone utility.

FIO - Run benchmarks with FIO.

pmembench - Build and run PMDK benchmarks.

Windows* Server manageability is provided by Microsoft* through PowerShell*.

Persistent Memory Development Zone

Intel® Speed Select Technology (Intel® SST)

Cloud service providers and enterprises often purchase multiple server configurations to handle diverse workloads and usages. This can increase total cost of ownership through higher power consumption, more complex management, and the difficulty of keeping every system fully utilized. Intel® Speed Select Technology is a collection of features that helps address this by providing a way to prioritize processor attributes.

Two features are available on the third generation Intel® Xeon® processor Scalable family: Intel® Speed Select Technology-Turbo Frequency (Intel® SST-TF) and Intel® Speed Select Technology-Core Power (Intel® SST-CP). They are available only on the network-focused processor SKUs and can be discovered using software tools enabled for Linux.

Intel® Speed Select Technology-Turbo Frequency (Intel® SST-TF)

Intel® SST-TF is a new feature that allows some cores to be tagged as high priority, giving them a turbo frequency that exceeds the all-core turbo frequency limit when all cores are active. The overall frequency envelope of the processor socket stays the same, but the frequency an individual core receives can deviate from the specified all-core turbo frequency. All cores on the socket remain active; the envelope shifts so that high-priority cores run at higher frequencies while low-priority cores drop proportionally in frequency to compensate. The feature can be enabled, disabled, or adjusted on a per-core basis at runtime.
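The "same envelope, shifted distribution" idea reduces to simple arithmetic. The Python sketch below is a deliberately simplified, hypothetical model: it treats the socket's envelope as a fixed sum of per-core frequencies, whereas the real power control unit works in terms of power and thermal limits. The function name and the example numbers are illustrative only.

```python
from typing import Iterable, List


def sst_tf_frequencies(n_cores: int, all_core_turbo_mhz: float,
                       high_priority: Iterable[int],
                       hp_turbo_mhz: float) -> List[float]:
    """Toy linear model: raise high-priority cores to hp_turbo_mhz and lower
    every other core by the same total, keeping the summed budget fixed."""
    hp = set(high_priority)
    if any(not 0 <= core < n_cores for core in hp):
        raise ValueError("core index out of range")
    if not hp or len(hp) >= n_cores:
        raise ValueError("need at least one high- and one low-priority core")
    budget = n_cores * all_core_turbo_mhz  # stand-in for the socket envelope
    lp_mhz = (budget - len(hp) * hp_turbo_mhz) / (n_cores - len(hp))
    if lp_mhz <= 0:
        raise ValueError("high-priority target exceeds the budget")
    return [hp_turbo_mhz if core in hp else lp_mhz for core in range(n_cores)]
```

For example, with 8 cores at a 2800 MHz all-core turbo, raising cores 0 and 5 to 3400 MHz leaves the other six cores at (22400 - 6800) / 6 = 2600 MHz in this model, and the total stays at 22400 MHz.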

One potential use case is to combine this feature with Global Extensible Open Power Management (GEOPM), an open-source power management runtime and framework focused on improving the performance and efficiency of HPC workloads. HPC jobs often include serial phases in which a single thread does the work. During such a phase, Intel® SST can raise the turbo frequency of that thread's core beyond its normal limit to maximize performance, even when C-states are disabled on the platform.

Figure 2. Illustration of single high priority core receiving additional turbo frequency when a surplus of turbo frequency is available.

Intel® SST and GEOPM can also help when the workload moves into its parallel execution phase. Invariably, some parallel threads run slower than others, and those threads can be given additional turbo frequency as needed.

As with GEOPM, Intel® SST-TF can be applied in any asymmetric or heterogeneous workload scenario. It can also help in traditional cloud environments: virtual machines with stricter service-level agreements can be scheduled on high-priority Intel® SST-TF cores, while general-purpose virtual machines run on the remaining cores.

Intel® Speed Select Technology - Core Power (Intel® SST-CP)

Figure 3. Illustration showing an overview of Intel® SST-CP in operation

Intel® SST-CP is the foundation for Intel® SST-TF and provides its underlying concept of core prioritization. Intel® SST-CP allows cores to be configured into priority groups, each identified with a class of service characterized by a priority weight and minimum and maximum frequencies. When surplus processor power or frequency is available, the power control unit (PCU) distributes the surplus among the cores according to the weights assigned to them. In Figure 3, the higher-priority core receives the surplus frequency first. When Intel® SST-CP is disabled, the PCU distributes the surplus across the cores without regard to the priority of the work each core is doing.

To get the best results from Intel® SST-CP, plan the placement of prioritized cores carefully. For example, spreading high-priority cores physically across the NUMA nodes of a processor socket helps minimize thermal issues. The feature requires workload-specific tuning.

Communications workloads are increasingly implemented as virtual network functions (VNFs). In such deployments, a few of the most demanding VNFs can become bottlenecks on a small number of cores. Intel® SST-CP can relieve pressure on those cores and improve the performance of these VNFs.
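Weight-based distribution of a surplus with a per-class frequency ceiling can be sketched as a "water-filling" loop. The Python model below is hypothetical and is not the PCU's actual algorithm: each core has a class-of-service weight and maximum frequency (the minimum frequency is omitted for brevity), each core receives a share of the surplus proportional to its weight, and whatever a capped core cannot use is redistributed among the rest.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class ClassOfService:
    """Hypothetical per-core class-of-service settings."""
    weight: float  # relative priority weight
    max_mhz: int   # frequency ceiling for this class


def distribute_surplus(base_mhz: int, cores: Dict[int, ClassOfService],
                       surplus_mhz: float) -> Dict[int, float]:
    """Share surplus frequency in proportion to weight, capped at max_mhz."""
    freqs = {core: float(base_mhz) for core in cores}
    active = {c for c, cos in cores.items()
              if cos.weight > 0 and cos.max_mhz > base_mhz}
    remaining = float(surplus_mhz)
    while remaining > 0 and active:
        total_weight = sum(cores[c].weight for c in active)
        shares = {c: remaining * cores[c].weight / total_weight for c in active}
        remaining = 0.0
        for c, share in shares.items():
            headroom = cores[c].max_mhz - freqs[c]
            if share >= headroom:
                # Core hits its ceiling; return the excess to the pool.
                freqs[c] = float(cores[c].max_mhz)
                remaining += share - headroom
                active.discard(c)
            else:
                freqs[c] += share
    # Surplus left over once every core is capped is simply not used.
    return freqs
```

With weights 3 and 1, a 2000 MHz base, and an 800 MHz surplus, the cores receive 600 and 200 MHz. If the heavier core is capped at 2300 MHz, its unused 300 MHz flows to the other core, giving 2300 and 2500 MHz.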

Enabling Intel® Speed Select Technology

Configuration can be done through third-party orchestration software that uses the Intel® Speed Select Technology tool (intel-speed-select), which is part of the Linux kernel source tree under tools/power/x86/intel-speed-select. Configuration can also be done directly through the register interfaces exposed by the hardware.

Download scripts for exposing and provisioning Intel® Speed Select Technology features from GitHub.

Below is a list of articles on Intel® Speed Select Technology - Base Frequency (Intel® SST-BF). They cover enabling and configuration examples for different use cases. Even though Intel® SST-BF is not covered here, the family of Intel® Speed Select Technology features follows a similar enabling/configuration flow.

Intel® Speed Select Technology – Base Frequency Priority CPU Management for Open vSwitch* (OVS*)

Intel® Speed Select Technology – Base Frequency - Enhancing Performance

Intel® Speed Select Technology - Base Frequency (Intel® SST-BF) with Kubernetes* - deployment guide

Intel® Speed Select Technology - Base Frequency (Intel® SST-BF) – enabling guide (Bios and OS)

Intel® Virtual RAID on CPU (Intel® VROC)

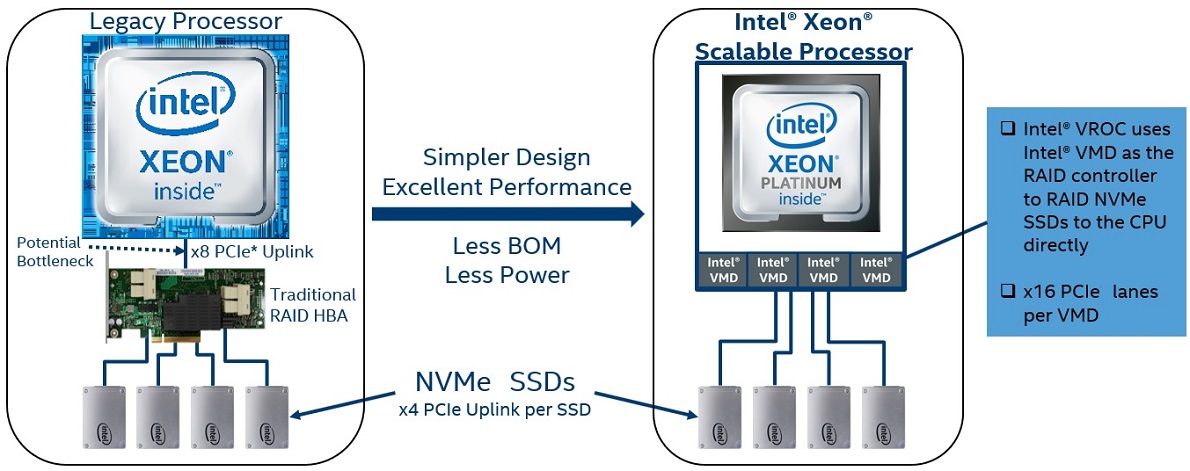

Figure 4. Intel® VROC replaces RAID add-on cards

Intel® VROC is a software solution that works with a hardware technology called Intel® Volume Management Device (Intel® VMD) to provide a hybrid RAID solution for NVMe* (Non-Volatile Memory Express*) solid-state drives (SSDs). Onboard CPU capabilities work closely with the chipset to provide fast access to NVMe SSDs attached directly to the platform's PCIe lanes. Because Intel® VROC is an integrated RAID solution that leverages hardware technologies within the platform, features such as hot insert and bootable RAID are available even if the OS does not provide them. This NVMe ecosystem, with its RAID and SSD management capabilities, is a compelling alternative to RAID HBAs, helping reduce platform BOM costs and easing the transition to NVMe SSDs.

Intel® VMD is designed primarily to improve the management of high-speed SSDs. Previously, SSDs were attached through SATA or other interfaces, and managing them through software or a discrete HBA was acceptable. As the industry moves to faster NVMe SSDs on PCIe to improve bandwidth, a discrete HBA adds delays and bottlenecks, while a pure software solution is incomplete for most enterprise users. Intel® VMD with Intel® VROC combines hardware and software to mitigate these issues.

Intel® VMD and Intel® VROC have been around since the first generation of Intel® Xeon® Scalable processors. Additional information can be found on the Intel® VROC website or the Intel® VROC support site.

New Deep Learning Instruction - Brain Floating Point Format (BFLOAT16)

Intel® Deep Learning Boost (Intel® DL Boost) was introduced on the previous generation of processors to improve the performance of AI inference. BFLOAT16 (BF16) is a new number format, supported by new instructions, that targets the performance of AI training. These instructions belong to the AVX512_BF16 extension of Intel® Advanced Vector Extensions 512 (Intel® AVX-512); they build on the AVX-512F foundation, with AVX-512VL providing 128-bit and 256-bit versions. Software developers whose applications already use the AVX-512 foundation instructions will need very little enabling work. The Intel® Deep Neural Network Library (Intel® DNNL), now the Intel® oneAPI Deep Neural Network Library (oneDNN), supports BF16 and enables integration and optimization into many popular AI frameworks, such as TensorFlow and Apache MXNet.

Figure 5. Comparison of the FP32, FP16, and BF16 floating-point formats.

Figure 5 compares the formats. Because an FP16 value is half the width of an FP32 value, twice as many FP16 values fit in a vector register, so an operation can process twice as many FP16 elements as FP32 elements in the same time. BF16 aims to combine the strengths of both: it has the same 16-bit width, and therefore the same throughput, as FP16, while keeping the 8-bit exponent, and therefore the dynamic range, of FP32. The trade-off is precision: BF16 has only 7 explicit mantissa bits, compared with 10 for FP16 and 23 for FP32. Range tends to be more important than precision for AI applications. To achieve good numerical behavior at the application level, however, it is essential to accumulate results in FP32. The VDPBF16PS instruction does this: it multiplies pairs of BF16 values and accumulates the results into FP32 elements. More information about the BF16 instructions can be found in the Intel® Architecture Instruction Set Extensions and Future Features Programming Reference.
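Why FP32 accumulation matters can be shown with a small software emulation. The Python sketch below converts FP32 values to BF16 with round-to-nearest-even (the AVX512_BF16 extension also provides conversion instructions such as VCVTNEPS2BF16) and computes a dot product two ways: in the style of VDPBF16PS, accumulating pairs of BF16 products into FP32, and naively, rounding the running sum back to BF16 after every step. It is an illustrative model with hypothetical helper names; the hardware's exact rounding, denormal, and exception behavior may differ, and inputs are assumed to be within FP32 range.

```python
import math
import struct
from typing import List


def fp32_bits(x: float) -> int:
    """Bit pattern of x after rounding to IEEE 754 single precision."""
    return struct.unpack("<I", struct.pack("<f", x))[0]


def round_to_fp32(x: float) -> float:
    """Round a Python float (double) to the nearest FP32 value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def fp32_to_bf16(x: float) -> int:
    """Convert to a 16-bit BF16 pattern using round-to-nearest-even."""
    bits = fp32_bits(x)
    if math.isnan(x):
        return ((bits >> 16) | 0x0040) & 0xFFFF  # keep sign, force a quiet NaN
    lsb = (bits >> 16) & 1    # lowest bit that survives truncation
    bits += 0x7FFF + lsb      # exact ties round toward an even result
    return (bits >> 16) & 0xFFFF


def bf16_to_fp32(h: int) -> float:
    """BF16 is the upper half of an FP32 pattern, so widening is exact."""
    return struct.unpack("<f", struct.pack("<I", (h & 0xFFFF) << 16))[0]


def dot_fp32_accumulate(a: List[int], b: List[int]) -> float:
    """VDPBF16PS-style: products of BF16 pairs accumulated in one FP32 lane."""
    if len(a) != len(b) or len(a) % 2:
        raise ValueError("inputs must be equal-length lists of BF16 pairs")
    acc = 0.0
    for i in range(0, len(a), 2):
        acc = round_to_fp32(acc + bf16_to_fp32(a[i + 1]) * bf16_to_fp32(b[i + 1]))
        acc = round_to_fp32(acc + bf16_to_fp32(a[i]) * bf16_to_fp32(b[i]))
    return acc


def dot_bf16_accumulate(a: List[int], b: List[int]) -> float:
    """Naive variant: the running sum is rounded back to BF16 every step."""
    acc = fp32_to_bf16(0.0)
    for x, y in zip(a, b):
        acc = fp32_to_bf16(bf16_to_fp32(acc) + bf16_to_fp32(x) * bf16_to_fp32(y))
    return bf16_to_fp32(acc)
```

For 1,000 products of 1.0 × 1.0, the FP32 accumulator reaches 1000.0, but the BF16 accumulator stops at 256.0: with only 8 significant bits, 257 is not representable, and the tie between 256 and 258 rounds to the even value 256 on every subsequent step.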

Additional Bfloat16 Resources

Code Sample: Intel® Deep Learning Boost New Deep Learning Instruction bfloat16 - Intrinsic Functions

Lower Numerical Precision Deep Learning Inference and Training

Whitepaper: BFLOAT16 – Hardware Numerics Definition

Leveraging the bfloat16 Artificial Intelligence Datatype For Higher-Precision Computations

Intel® Platform Firmware Resilience (Intel® PFR)

Intel® Platform Firmware Resilience (Intel® PFR) is designed to protect against, detect, and recover from security threats such as permanent denial-of-service (PDoS) attacks. In a PDoS attack, the hardware is targeted with the intent of rendering the system permanently inoperable, for example by corrupting the system firmware in a way that cannot be recovered. This is a growing threat to critical infrastructure systems such as those associated with the power grid, banks, and other utilities.

Intel® PFR uses a built-in Intel® MAX® 10 FPGA to improve protection against security threats. The FPGA, together with soft IP, acts as the primary root of trust. The soft IP provides visibility and flexibility in the design, allowing optional customizations to handle changes in hardware, firmware, or customer needs; this flexibility is valuable, for example, when switching to a different BIOS chip manufacturer. The FPGA helps protect the firmware by verifying that it is safe before the code executes. It also performs boot and runtime monitoring to ensure that the server is running known-good firmware for components such as the BIOS, BMC, Intel® ME, SPI descriptor, and power supply. Notably, the FPGA can automatically recover when it detects corrupted firmware, a process that previously required manual intervention.

Intel® PFR meets the NIST SP 800-193 Platform Firmware Resiliency Guidelines. Support for the feature is included in the Intel® Security Libraries for Data Center (Intel® SecL - DC).
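The verify-before-execute and automated-recovery behavior can be pictured with a small Python sketch. It is a simplified, hypothetical model: the class and function names are illustrative, and it compares a SHA-256 digest against a provisioned known-good value, whereas a real root of trust typically authenticates signed firmware images.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class FirmwareRegion:
    """Hypothetical flash region with an active image and a recovery copy."""
    name: str
    active: bytes
    recovery: bytes
    known_good_sha256: str  # digest provisioned into the root of trust


def sha256_hex(image: bytes) -> str:
    return hashlib.sha256(image).hexdigest()


def verify_and_recover(region: FirmwareRegion) -> str:
    """Check the active image before it may run; restore it if corrupted."""
    if sha256_hex(region.active) == region.known_good_sha256:
        return "ok"  # the component can be released to execute
    if sha256_hex(region.recovery) == region.known_good_sha256:
        region.active = region.recovery  # automated recovery, no manual reflash
        return "recovered"
    # In this sketch, refuse to release a component with no trusted image.
    raise RuntimeError(f"{region.name}: no trusted firmware image available")
```

A corrupted active BIOS image, for example, is detected by the digest mismatch and replaced from the recovery copy before anything executes, with no operator involvement.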

Intel® Security Libraries for Data Center (Intel® SecL - DC)

Intel® SecL - DC consists of software components providing end-to-end cloud security solutions with integrated libraries. Users have the flexibility to either develop their customized security solutions with the provided libraries or deploy the software components in their existing infrastructure.

Intel® SecL - DC supports several new security features. Using the Platform Configuration Registers (PCRs) in the Trusted Platform Module (TPM), remote attestation can be extended to include files and folders on a Linux host system, and these measurements are included in determining the host's overall trust status. In addition, virtual machine and Docker container images can be encrypted at rest, with access to the keys tied to platform integrity attestation. Because the security attributes in the platform integrity attestation report control access to the decryption keys, this feature protects data, IP, and code at rest in Docker container and virtual machine images.
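PCR-based attestation rests on the TPM extend operation: software cannot write a PCR directly, only extend it, so its value is a hash chain over every measurement recorded since reset. The Python sketch below shows that chain for a list of file contents using a SHA-256 bank, plus a toy key-release check. The function names are hypothetical, this sketch starts the PCR at all zeros, and `release_key` merely stands in for the remote attestation and key-release services of a real deployment.

```python
import hashlib
import hmac
from typing import List, Optional

DIGEST_SIZE = 32  # SHA-256 PCR bank


def pcr_extend(pcr: bytes, measurement: bytes) -> bytes:
    """TPM extend: new value = SHA-256(old value || measurement)."""
    return hashlib.sha256(pcr + measurement).digest()


def measure_files(contents: List[bytes]) -> bytes:
    """Extend a PCR once per file, in order, starting from all zeros."""
    pcr = bytes(DIGEST_SIZE)
    for data in contents:
        pcr = pcr_extend(pcr, hashlib.sha256(data).digest())
    return pcr


def release_key(reported: bytes, expected: bytes, key: bytes) -> Optional[bytes]:
    """Toy policy: hand out the decryption key only if the PCR matches."""
    return key if hmac.compare_digest(reported, expected) else None
```

Because each step hashes the previous value, changing any file, or even the order of measurements, produces a different final PCR, so the toy policy withholds the key from a host whose measured files have been altered.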

The Author: David Mulnix is a software engineer and has been with Intel Corporation for over 25 years. His areas of focus include software automation, server power, and performance analysis, and he was one of the contributors to the Server Efficiency Rating Tool™.

Contributors: Andy Rudoff, Kartik Ananth and Vasudevan Srinivasan.